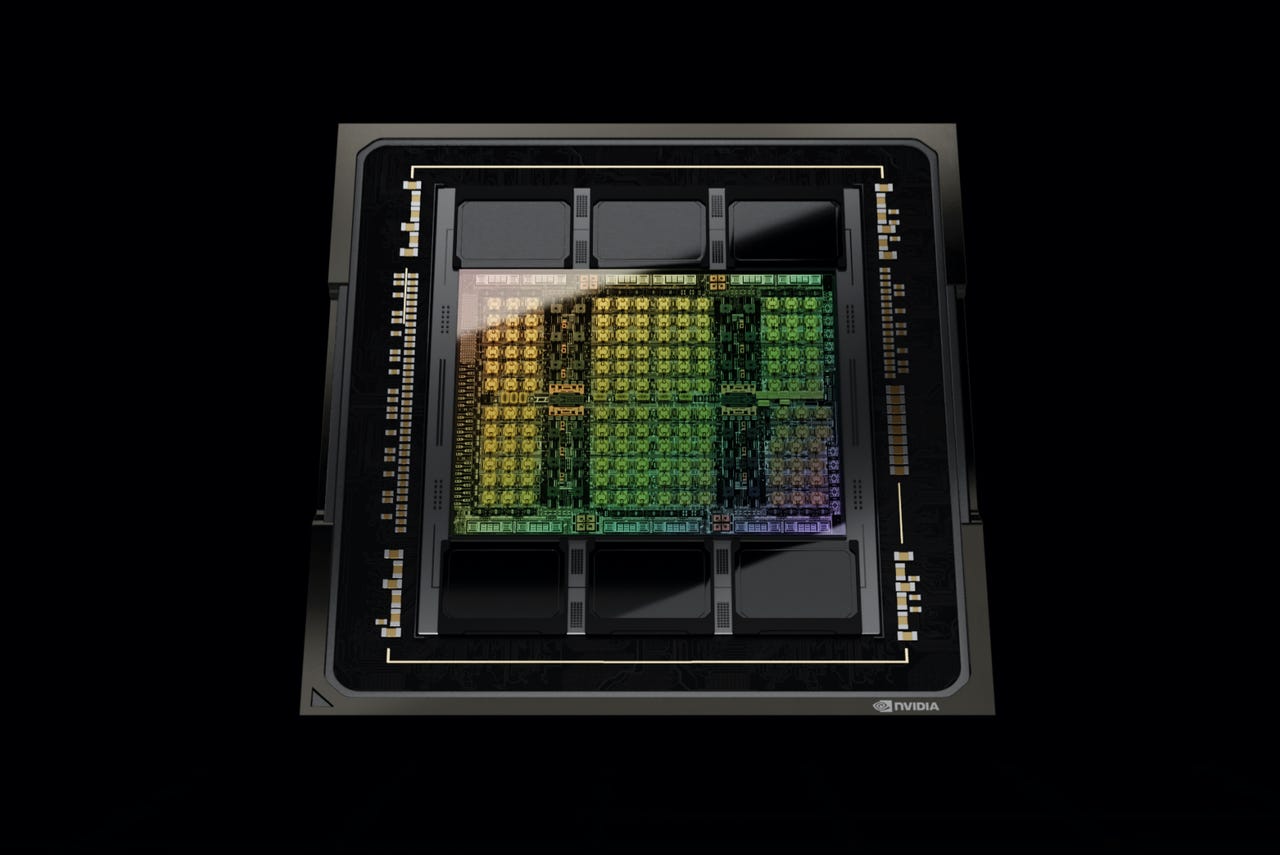

Nvidia's Hopper, its latest generation of GPU design, showed up in the MLPerf benchmark tests of neural net hardware. The chip showed admirable scores, with a single-chip system besting some systems that used multiple chips of the older variety, the A100.

Nvidia

Increasingly, the trend in machine learning forms of artificial intelligence is toward larger and larger neural networks. The biggest neural nets, such as Google's Pathways Language Model, as measured by their parameters, or "weights," are clocking in at over half a trillion weights, where every additional weight increases the computing power used.

How is that increasing size to be dealt with? With more powerful chips, on the one hand, but also by putting some of the software on a diet.

On Thursday, the latest benchmark test of how fast a neural network can be run to make predictions was presented by MLCommons, the consortium that runs the MLPerf tests. The reported results featured some important milestones, including the first-ever benchmark results for Nvidia's "Hopper" GPU, unveiled in March.

At the same time, Chinese cloud giant Alibaba submitted the first-ever reported results for an entire cluster of computers acting as a single machine, blowing away other submissions in terms of the total throughput that could be achieved.

And a startup, Neural Magic, showed how it was able to use "pruning," a means of cutting away parts of a neural network, to achieve a slimmer piece of software that can perform just about as well as a normal program would but with less computing power needed.

"We're all training these embarrassingly brute-force, dense models," said Michael Goin, product engineering lead for Neural Magic, in an interview with ZDNet, referring to giant neural nets such as Pathways. "We all know there has to be a better way."

The benchmark tests, called Inference 2.1, represent one half of the machine learning approach to AI, when a trained neural network is fed new data and has to produce conclusions as its output. The benchmarks measure how fast a computer can produce an answer for a number of tasks, including ImageNet, where the challenge is for the neural network to apply one of several labels to a photo describing the object in the photo, such as a cat or dog.

Chip and system makers compete to see how well they can do on measures such as the number of photos processed in a single second, or how low they can get latency, the total round-trip time for a request to be sent to the computer and a prediction to be returned.

In addition, some vendors submit test results showing how much energy their machines consume, an increasingly important element as datacenters become larger and larger, consuming vast amounts of power.

The other half of the problem, training a neural network, is covered in another suite of benchmark results that MLCommons reports separately, with the latest round being in June.

The Inference 2.1 report follows a previous round of inference benchmarks in April. This time around, the reported results pertained only to computer systems operating in datacenters and the "edge," a term that has come to encompass a variety of computer systems other than traditional data center machines. One spreadsheet is posted for the datacenter results, another for the edge.

The latest report did not include results for the ultra-low-power devices known as TinyML and for mobile computers, which had been lumped in with data center in the April report.

In all, the benchmarks received 5,300 submissions by the chip makers and partners, and startups such as Neural Magic. That was almost forty percent more than in the last round, reported in April.

As in the past, Nvidia took top marks for speeding up inference in numerous tasks. Nvidia's A100 GPU dominated the number of submissions, as is often the case, being integrated with processors from Intel and Advanced Micro Devices in systems built by a gaggle of partners, including Alibaba, ASUSTeK, Microsoft Azure, Biren, Dell, Fujitsu, GIGABYTE, H3C, Hewlett Packard Enterprise, Inspur, Intel, Krai, Lenovo, OctoML, SAPEON, and Supermicro.

Two entries were submitted by Nvidia itself with the Hopper GPU, designated "H100," in the datacenter segment of the results. One system was accompanied by an AMD EPYC CPU as the host processor, and another was accompanied by an Intel Xeon CPU.

In both cases, it's noteworthy that the Hopper GPU, despite being a single chip, scored very high marks, in many cases outperforming systems with two, four or eight A100 chips.

The Hopper GPU is expected to be commercially available later this year. Nvidia said it expects in early 2023 to make available its forthcoming "Grace" CPU chip, which will compete with Intel and AMD CPUs, and that part will be a companion chip to Hopper in systems.

Alongside Nvidia, mobile chip giant Qualcomm showed off new results for its Cloud AI 100 chip, a novel accelerator built for machine learning tasks. Qualcomm added new system partners this round, including Dell and Hewlett Packard Enterprise and Lenovo, and it increased the number of total submissions using its chip.

While the bake-off between chip makers and system makers tends to dominate headlines, an increasing number of clever researchers show up in MLPerf with novel approaches that can get more performance out of the same hardware.

Past examples have included OctoML, the startup that is trying to bring the rigor of DevOps to running machine learning.

This time around, an interesting approach was offered by four-year-old, venture-backed startup Neural Magic. The company's technology comes in part from research by founders Nir Shavit, a scholar at MIT, and Alex Matveev, formerly an MIT research scientist and the company's CTO.

The work points to a possible breakthrough in slimming down the computing needed by a neural network.

Neural Magic's technology trains a neural network and finds which weights can be left unused. It then sets those weights to a zero value, so they are not processed by the computer chip.

That approach, called pruning, is akin to removing the unwanted branches of a tree. It is also, however, part of a broader trend in deep learning going back decades known as "sparsity." In sparse approaches to machine learning, some data and some parts of programs can be deemed unnecessary for practical purposes.
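To make the idea concrete, here is a minimal sketch of magnitude-based pruning in plain Python. It is an illustration only, not Neural Magic's actual code: the function names `magnitude_prune` and `sparse_dot`, the toy weight list, and the choice to prune in a single pass are all assumptions made for this example.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to 0.0.

    `sparsity` is the fraction of weights to zero out (0.6 means 60%).
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = round(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def sparse_dot(weights, inputs):
    """Dot product that skips zeroed weights, so pruned entries cost no multiply."""
    return sum(w * x for w, x in zip(weights, inputs) if w != 0.0)


# Hypothetical toy weights; a real network has millions or billions of them.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.1, -0.9, 0.04, 0.5]
pruned = magnitude_prune(weights, sparsity=0.6)
kept = sum(1 for w in pruned if w != 0.0)
print(pruned, kept)
```

In practice, pruning is usually interleaved with further training so the remaining weights can compensate for the ones removed; this sketch shows only the zeroing step.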

Neural Magic figures out ways to drop neural network weights, the tensor structures that take up much of the memory and bandwidth needs of a neural net. The original network of many-to-many connected layers is pruned until only some connections remain, while the others are zeroed out. The pruning approach is part of a larger principle in machine learning known as sparsity.

Neural Magic

Another technique, called quantization, converts some numbers to simpler representations. For example, a 32-bit floating point number can be compressed into an 8-bit scalar value, which is easier to compute.
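As a rough illustration of quantization, the sketch below maps floating-point values to signed 8-bit integers using a single shared scale factor. This symmetric linear scheme is one common approach and is assumed here for illustration; the article does not say which scheme Neural Magic uses, and the names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero input, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical toy weights for illustration.
weights = [0.42, -1.27, 0.0, 0.635, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each value now needs one byte instead of four, at the cost of a rounding error of at most half the scale factor per value.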

The Neural Magic technology acts as a kind of conversion tool that a data scientist can use to automatically find the parts of their neural network that can be safely discarded without sacrificing accuracy.

The benefit, according to Neural Magic's project lead, is not only to reduce how many calculations a processor has to crunch, it is also to reduce how much a CPU has to go outside the chip to external memory, such as DRAM, which slows down everything.

"You remove 90% of the parameters and you remove 90% of the FLOPs you need," said Goin of Neural Magic, referring to floating-point operations, the basic calculations a processor performs to run a neural network.

In addition, "It's very easy for CPUs to get memory bandwidth-limited," Goin said. "Moving large tensors requires a lot of memory bandwidth, which CPUs are bad at." Tensors are the structures that organize values of neural network weights and that have to be retained in memory.

With pruning, the CPU can have advantages over a GPU, says Neural Magic. On the left, the GPU has to run an entire neural network, with all its weights, in order to fill the highly parallelized circuitry of the GPU. On the right, a CPU is able to use abundant L3 cache memory on chip to run tensors from local memory, known as tensor columns, without accessing off-chip DRAM.

Neural Magic

Neural Magic submitted results in the datacenter and edge categories using systems with two Intel Xeon 8380 chips running at 2.3 gigahertz. The category Neural Magic chose was the "Open" category of both datacenter and edge, where submitters are allowed to use unique software approaches that don't conform to the standard rules for the benchmarks.

The company used its novel runtime engine, called DeepSparse, to run a version of the BERT natural language processing neural network developed by Google.

By pruning the BERT network, the vastly reduced size of the weights could be held in the CPU's local memory rather than going off-chip to DRAM.

Modern CPUs have capacious local memory known as caches that can store frequently used values. The so-called Level 3 cache on most server chips such as Xeon can hold tens of megabytes of data. The Neural Magic DeepSparse software cuts the BERT program from 1.3 gigabytes in file size down to as little as 10 megabytes.
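The arithmetic behind that claim can be sketched in a few lines. The two file sizes come from the figures above; the L3 cache capacity is an assumed, illustrative value standing in for "tens of megabytes," not a specification of any particular Xeon, and `fits_in_cache` is a hypothetical helper.

```python
MB = 10**6

dense_model_bytes = 1_300 * MB   # 1.3 GB dense BERT file size cited above
sparse_model_bytes = 10 * MB     # smallest pruned size cited above
assumed_l3_bytes = 32 * MB       # ASSUMPTION: illustrative L3 capacity only


def fits_in_cache(model_bytes, cache_bytes):
    """True if the whole model could, in principle, sit in the cache."""
    return model_bytes <= cache_bytes


compression_ratio = dense_model_bytes / sparse_model_bytes
print(f"size reduction: {compression_ratio:.0f}x")
print("dense fits in L3: ", fits_in_cache(dense_model_bytes, assumed_l3_bytes))
print("sparse fits in L3:", fits_in_cache(sparse_model_bytes, assumed_l3_bytes))
```

This ignores activations and other working data that also compete for cache space, so it is an upper bound on how well the weights fit rather than a performance model.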

"Now that the weights can be pretty small, then you can fit them into the caches and more specifically, fit multiple operations into these various levels of cache, to get more effective memory bandwidth instead of being stuck going out to DRAM," Goin told ZDNet.

The DeepSparse program showed dramatically higher numbers of queries processed per second than many of the standard systems.

Compared to results in the "Closed" version of the ResNet datacenter test, where strict rules of software are followed, Neural Magic's single Intel CPU topped numerous submissions with multiple Nvidia accelerators, including from Hewlett Packard Enterprise and from Nvidia itself.

In a more representative comparison, a Dell PowerEdge server with two Intel Xeons handled only 47.09 queries per second while one of the Neural Magic machines was able to produce 928.6 queries per second, an order of magnitude speed-up.
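For readers who want the exact ratio, a quick calculation from the two throughput figures above shows the speed-up is a bit under 20 times. The variable names are illustrative.

```python
import math

dell_qps = 47.09           # Dell PowerEdge, two Intel Xeons, dense BERT
neural_magic_qps = 928.6   # Neural Magic system, pruned BERT

speedup = neural_magic_qps / dell_qps
orders_of_magnitude = math.log10(speedup)

print(f"speed-up: {speedup:.1f}x")
print(f"orders of magnitude: {orders_of_magnitude:.2f}")
```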

Neural Magic's Intel Xeon-based system turned in results an order of magnitude faster than a Dell system at the same level of accuracy despite having 60% of the weights of the BERT neural network zeroed out.

MLCommons

The version of BERT used by Neural Magic's DeepSparse had 60% of the weights removed, with ten of its layers of artificial neurons zeroed out, leaving only 14, while the Dell computer was working on the standard, intact version. Nevertheless, the Neural Magic machine still produced predictions that were within 1% of the standard 99% accuracy measure for the predictions.

Neural Magic described its ability to prune the BERT natural language processing program to varying degrees of sparsity.

Neural Magic

Neural Magic has published its own blog post describing the achievement.

Neural Magic's work has broad implications for AI and for the chip community. If neural networks can be tuned to be less resource-hungry, it may provide a way to stem the ever-increasing power budget of machine learning.

"When you think about the real cost of deploying a box to do inference, there's a lot to be done on the runtime engine, but there's even more to be done in terms of the machine learning community, in terms of getting ML engineers and data scientists more tools to optimize their models," Goin told ZDNet.

"We have a big roadmap," said Goin. "We want to open up optimization to more people."

"If we can prune a model to 95% of its weight, why isn't everyone doing this?" said Goin.

Sparsity, said Goin, is "going to be like quantization, it's going to be something that everyone picks up, we're just on the edge of it."

For the chip industry, the fact that Neural Magic was able to show off benefits of x86 chips means that many more kinds of chip approaches could be viable for both inference and training. Neural Magic earlier this year partnered with Advanced Micro Devices, Intel's biggest competitor for x86 CPUs, showing that the work is not limited to just Intel-branded chips.

The scientists at Intel even turned to Neural Magic last year when they set out to produce pruned models of BERT. In a paper by Ofir Zafrir and colleagues at Intel Labs in Israel, the Neural Magic approach, called "Gradual Magnitude Pruning," was combined with an Intel approach called "Learning Rate Rewinding." The combination of the two resulted in minimal loss in accuracy, the authors reported.

Goin expects Neural Magic will add ARM-based systems down the road. "I'd love to be able to do an MLPerf submission right from this MacBook Pro here," said Goin, referring to the Mac's M-series silicon, which uses the ARM instruction set.

Neural Magic, currently almost 40 people, last year raised $30 million in venture capital and has a "runway into 2024," according to Goin. The company monetizes its code by selling a license to use the DeepSparse runtime engine. "We see the greatest interest for things such as natural language processing and computer vision at the edge," said Goin.

Retail is one really big prospective area for use cases, said Goin, as are manufacturing and IoT applications. But the applicability is really any number of humble systems out there in the world that don't have fancy accelerators and may never have such hardware. "There are industries that have been around for decades that have CPUs everywhere," observed Goin. "You go in the back room of a Starbucks, they have a rack of servers in the closet."

Among other striking firsts for MLPerf, cloud giant Alibaba was the first and only company to submit a system comprised of several machines in what is usually a competition for single machines.

Alibaba submitted five systems that are composed of variations of two to six nodes, running a mix of Intel Xeon and Nvidia GPUs. An Alibaba software program, called Sinian vODLA, automatically partitions the tasks of a neural network across different processors among the different computers.

The most striking feature is that the Sinian software can decide on the fly to apportion tasks of a neural net to different kinds of processors, including a variety of Nvidia GPUs, not just one, so that the difference in ability of each processor is not an obstacle but a potential advantage.

"This is the future, heterogeneous computing," said Weifeng Zhang, who is Alibaba's chief scientist in charge of heterogeneous computing, in an interview with ZDNet.

The benchmark results from the Alibaba Cloud Server showed some eye-popping numbers. On the BERT language task, a four-node system with 32 Nvidia GPUs in total was able to run over 90,000 queries per second. That is 27% faster than the top winning submission in the Closed category of data center machines, a single machine from Inspur using 24 GPUs.

An Alibaba cloud computer consisting of four separate computers operating as one, top, was able to marshal 32 Nvidia GPUs to produce results twenty-seven percent faster than the top single system, a 24-chip machine from Inspur.

MLCommons

"The value of this work can be summed up as easy, efficient, and economical," Zhang told ZDNet.

On the first score, ease of use, "we can abstract away the heterogeneity of the computing [hardware], make it more like a giant pool of resources" for clients, Zhang explained.

How to make multiple computers operate as one is an area of computer science that is showing renewed relevance with the rise of the very large neural networks. However, developing software systems to partition work across many computers is an arduous task beyond the reach of most industrial users of AI.

Nvidia has found ways to partition its GPUs to make them multi-tenant chips, noted Zhang, a feature called "MIG," for multi-instance GPU.

That is a start, he said, but Alibaba hopes to go beyond it. "MIG divides the GPU into seven small components, but we want to generalize this, to go beyond the physical limitations, to use resource allocation on actual demand," explained Zhang.

"If you are running ResNet-50, maybe you are using only 10 TOPs of computation," meaning, trillions of operations per second. Even one of the MIGs is probably more than a user needs, he said. "We can make a more fine-grained allocation," with Sinian, "so maybe a hundred users can use [a single GPU] at a time."

On the second point, efficiency, most chips, noted Zhang, rarely are used as much as they could be. Because of a variety of factors, such as memory and disk access times and bandwidth constraints, GPUs sometimes get less than 50% utilization, which is a waste. Sometimes, that's as low as 10%, noted Zhang.

"If you used one machine with 8 PCIe slots" connecting chips to memory, said Zhang, "you would be using less than 50% of your resources because the network is the bottleneck." By handling the networking problem first, "we are able to achieve much higher utilization in this submission."

Perhaps just as important, as machines scale to more and more chips, power consumption in a single box becomes a thorny issue. "Assume you can build 32 slots on your motherboard" for GPUs, explained Zhang, "you may get the same result, but with 32 PCIe slots on the motherboard, your power supply will triple."

That's a big issue as far as trying to achieve green computing, he said.

The third issue, economics, pertains to how customers of Alibaba get to purchase instances. "This is important for us, because we have a lot of devices, not just GPUs, and we want to leverage all of them," said Zhang. "Our customers say they want to use the latest [chips], but that may not be exactly what they need, so we want to make sure everything in the pool is available to the user, including A100 [GPUs] but also older technology."

"As long as we solve their problem, give them more economical resources, this will probably save more money for our clients - that's basically the main motivation for us to work on this."

If you'd like to dig into more details on the Alibaba work, a good place to start is a deck of slides that Zhang used in June to give a talk at the International Symposium on Computer Architecture.

The Alibaba work is especially intriguing because for some time now, specialists in the area of computer networking have been talking about a second network that sits behind the local area network, a kind of dedicated AI network just for deep learning's massive bandwidth demand.

In that sense, both Alibaba's network submission, and Neural Magic's sparsity, are dealing with the over-arching issue of memory access and bandwidth, which many in the field say are far greater obstacles to deep learning than the compute part itself.
