Image: Meta

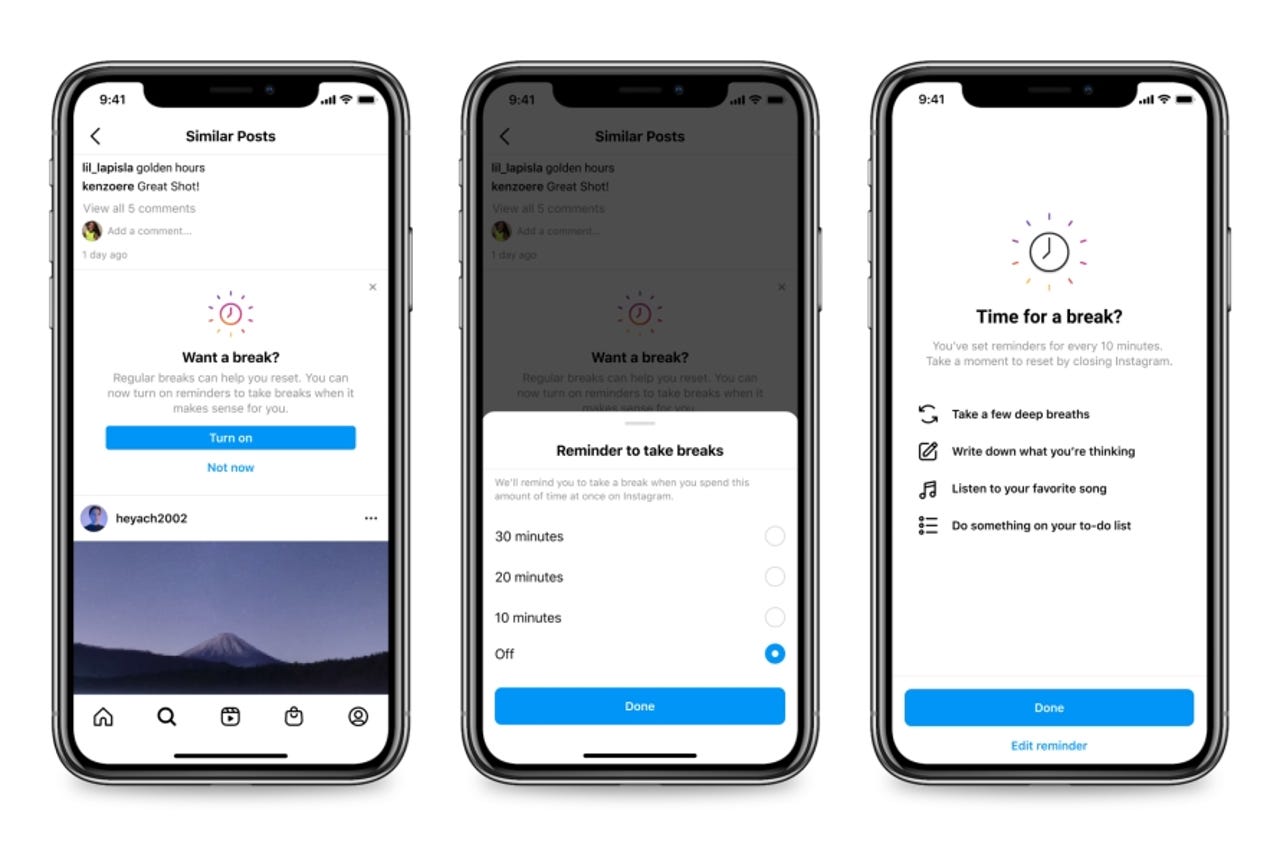

Instagram has announced it will launch a new feature designed to nudge people who have been scrolling on its platform for a set amount of time to take a break, as part of what the company calls its "ongoing youth safety work".

The Take a Break feature, which has been in testing since November, will initially be available in the US, UK, Ireland, Canada, Australia, and New Zealand, with plans to roll it out globally in the coming months.

Users who turn on the feature can choose to be reminded to take a break after 10, 20, or 30 minutes on the platform, and will then be shown tips on how to reset.

According to Instagram head Adam Mosseri, the Take a Break reminders have been built using the platform's existing time management tools, like Daily Limit, which lets people know when they've reached the total amount of time they want to spend on Instagram each day, and offers the ability to mute Instagram notifications after reaching the set threshold.

He explained that the tool is part of a broader effort by Instagram's parent company, Meta, to protect teens.

"Every day I see the positive impact that Instagram has for young people everywhere. I'm proud that our platform is a place where teens can spend time with the people they care about, explore their interests, and explore who they are," Mosseri wrote in a blog post.

"I want to make sure that it stays that way, which means above all keeping them safe on Instagram. We'll continue doing research, consulting with experts, and testing new concepts to better serve teens."

Additionally, Instagram said that in March it will introduce tools that allow parents and guardians to view how much time their teens spend on Instagram and to set limits, and that give teens the option to notify their parents if they report someone. It will also launch an educational hub for parents and guardians, with product tutorials and tips to help them discuss social media use with their teens.

Other features in the pipeline, according to the company, include taking a "stricter approach" to what it recommends to teens, actively nudging them towards different topics if they have been dwelling on one topic for a while, and stopping people from tagging or mentioning teens who do not follow them.

The move by the Meta-owned company comes as governments worldwide crack down on social media platforms over the potentially harmful content shared on them and its impact on the mental health and wellbeing of users, particularly young people.

Last week, the Australian government said it would commence a parliamentary inquiry to scrutinise major technology companies and the "toxic material" that resides on their online platforms.

Prime Minister Scott Morrison said the new inquiry would build on the proposed social media legislation to "unmask trolls".

"Big tech created these platforms, they have a responsibility to ensure their users are safe," he said. "Big tech has big questions to answer. But we also want to hear from Australians; parents, teachers, athletes, small businesses and more, about their experience, and what needs to change."

Australia's move follows a similar one in the US, where a bipartisan group of state attorneys-general announced they were launching a nationwide investigation into Meta to examine whether the company has violated state consumer protection laws and put the public at risk.

Among other things, the investigation will examine whether Meta provides and promotes its social media platform Instagram to children and teens despite knowing that such use is associated with physical and mental health harms. It will also target the techniques Meta uses to increase the frequency and duration of engagement by teens, and the harms allegedly caused by such extended engagement.

Over in the UK, a draft Online Safety Bill is being considered by Parliament. The Bill, which was put forward by the government earlier in the year, would force companies to protect their users from harmful content ranging from revenge porn to disinformation, including hate speech and racist abuse.

As part of its scrutiny of the Bill, the UK Parliament heard from Facebook whistleblower Frances Haugen, who said that social media platforms using opaque algorithms to spread harmful content should be reined in; otherwise, they may trigger a growing number of violent events such as the attack on the US Capitol Building last January.

Haugen's evidence to the UK Parliament came shortly after she told a US Senate inquiry into Facebook's operations that the company was "morally bankrupt," casting "the choices being made inside of Facebook" as "disastrous for our children, our privacy, and our democracy."
