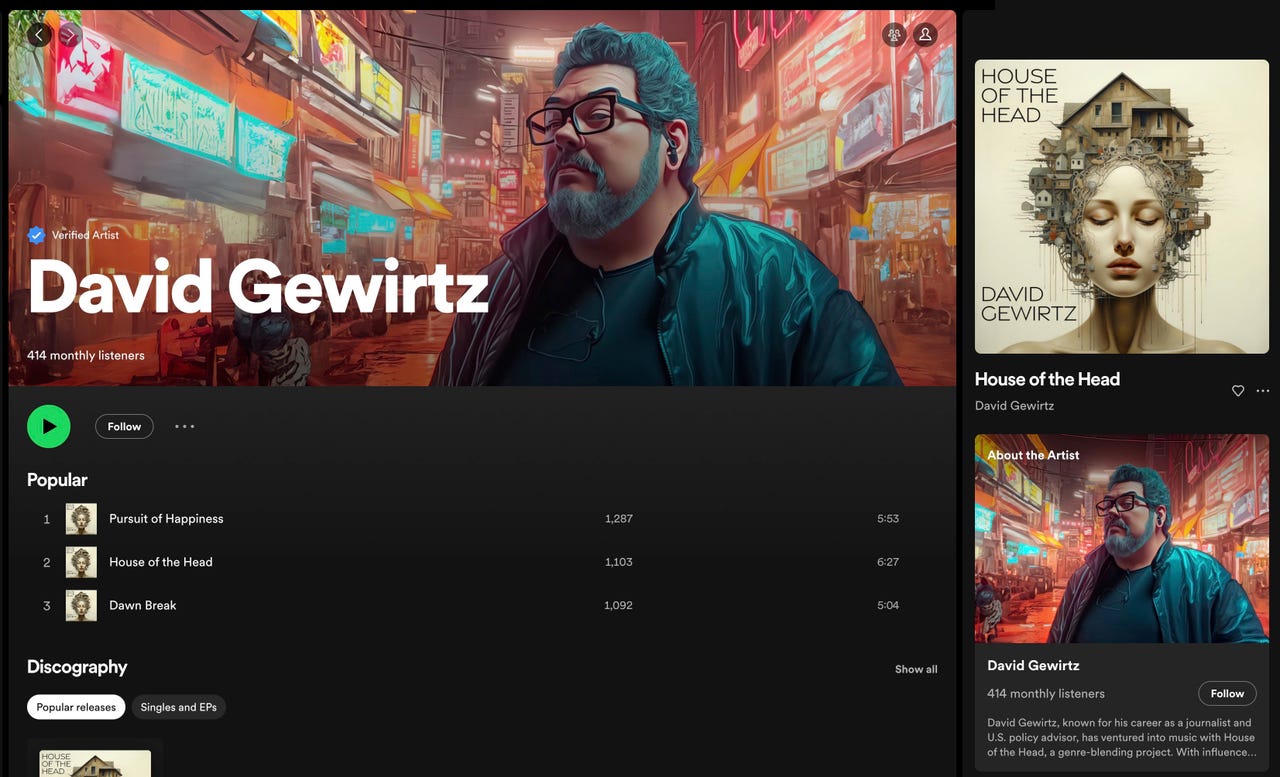

My music is now on Spotify, Apple Music, Amazon Music, and all the rest.

Screenshot by David Gewirtz

Some time ago, during a particularly dark time in my life, I found some solace in composing music that reflected my various moods. After that, the tracks sat, all 12 of them, waiting for -- I don't know -- something.

Many musicians have similar experiences. They create music, write songs, and compose tunes, but never really get them "out there."

Back in the day, the only ways you could share your music were through live performances, a self-published CD shared with all ten of your friends, or getting signed by a record label (a very high barrier to entry, rife with considerable difficulty and risk).

But all that has changed. You may not realize it, but Americans still buy CDs and vinyl (about half say they "like to purchase physical copies of their music"). That said, music streaming services have become even more popular and mainstream than physical album sales.

Launching a new music album or EP no longer requires catching the eye of an A&R (artists and repertoire) executive at a record label. You can use a digital music distributor to push your music out onto streaming services without having to be considered marketable enough by some record executive, which really helps if your music doesn't fit neatly into a popular genre.

This really is a golden age for indie music, because music releases are no longer solely dependent on the approval of mainstream sound gatekeepers. Anyone can launch an album and get it onto Spotify, Apple Music, Amazon Music, iTunes, Deezer, Tidal, and the rest.

So I did. And I used AI to help me make it happen.

AI was not used for:

AI was used for:

Let me be clear. While I did use my computer to mix licensed sound samples and compose my own work, I did not use an AI to create or mix my music. My music is all me. That's important because I want my listeners (dare I say, fans) to know that what's on those tracks represents my skills, feelings, heart, and soul.

I did dabble with, and then discard, the idea of using an AI to help master the music. Mastering is the process where an audio engineer takes your mix and zhuzhes it up, making everything match, sound right, and hit the best balance, and sometimes even pulling more out of the music than the composer was able to get into the original mix.

A good sound engineer is worth their weight in gold. So, of course, some companies have tried to create AIs to reduce the process to a few clicks.

To help me choose which mastering service to try, I watched these folks compare a number of automated mastering tools. They determined that LANDR was the best of the AI tools, so that's the one I tried.

I'm certainly not a sound engineer, and while anyone's music can probably benefit from the wisdom and skills of a deeply experienced human sound engineer, I write about AI. So I plunked down $40 for LANDR's premium mastering plan and gave it a shot.

While the UI for LANDR was fine, I found the results to be maddening. Did it sound better? Did it sound worse? Did I have a good enough ear to tell the difference?

LANDR lets you choose some options for how it will process your master, so you're essentially guiding the AI through something you don't otherwise know all that well.

Then, if you're not sure you like the sound, you can use some refinement controls to further post-process the sound.

If this is AI, it's definitely a lazy one. I want my sound engineer (or even my AI sound engineer) to make the decisions. If I have to make all the sound decisions, then what's its real value?

I do believe a few of the tracks were improved...slightly. But my main, favorite tracks were clearly made muddier. I agonized over this for almost two weeks until, after sleeping on it, I finally arrived at the answer that was right for me.

I wanted the music to be my music. Especially in this time of generative AI, and especially since I work a lot with AI, I didn't want any implication that some machine wrote my music. So, if I wanted to be able to say, "No AI was used in the creation or mixing of this music," then AI mastering was not right for me.

That said, while no AI was used in the creation or mixing of this music, I used the heck out of AI for the creation of album covers, website graphics, and related promotional text.

The first step for which I turned to AI was in creating the album covers. In fact, it was the AI's work product that helped me refine the release strategy for my music.

I have 12 tracks completed, which is enough for a full album of music. Back in the days before social media, you'd want to release an entire album, especially if you were an indie musician.

But today, it's better to release music in what's called a waterfall release strategy. The idea is you do successive releases of singles, in order to get more play on social media and on digital platforms.

I tried getting Midjourney to create covers for all 12 tracks, but I wasn't happy with some of them. On the other hand, I was ecstatic about four of them, as well as about the image that will eventually become the cover of the full album itself.

So I decided to release four EPs, three months apart, each with three songs. Each EP (extended play) will use one of the covers generated by Midjourney. Then after all four EPs and all 12 songs are released separately, I'll combine them into one LP album using another image generated by Midjourney.

The first of these EPs is "House of the Head." My prompt for Midjourney was simple. I just typed in:

/imagine house of the head

I got back a variety of results:

Eventually, I settled on this cover (I added the text in Photoshop), which was compelling and reflected a lot of what I wanted to showcase not only in the song, but in the three tracks of this first EP.

There are four additional images I'll be using over the next year, but you'll have to wait and see those when they are released with the music.

When you submit music to a distributor, streaming service, and even for promotion, you're supposed to know what genre the tunes fall into. I had no idea. I tend to like the music I like, but I've never really paid attention to the music genre. The last music class I took was music appreciation, back in junior high.

But I did know the instruments used to create the various sounds I incorporated into my compositions. I fed a list of their names to ChatGPT Plus with the WebPilot add-on and asked this:

You are a music A&R executive. You like an album that uses rain sticks, flutes, cymbals, trumpets, saxophones, trombones, bass, electric, and acoustic guitar, congas, Tibetan bowls and chimes, rawhide shakers, an autoharp, a darbuka, a panpipe, and the one string Afro-Brazilian bow called the berimbau.

You'll also hear a wide variety of synthesizer effects, a Steinway Grand Piano, and even the sounds of rain and thunder.

List the two main genres and a sub-genre for each main genre music with these influences will be cataloged under. Use WebPilot if needed.

From that, I got back the following recommendation:

Is it perfect? Probably not. If I really were an A&R executive, I'd be able to fit the tunes into their proper categories. But since I'm no expert, the AI did give me a leg up. The songs were accepted, and so far, the playlist curators who've looked at the songs didn't feel they were in the wrong genres, so I'll call this another win for the AI.

My next challenge was describing the music itself. Again, I turned to ChatGPT. This time, I fed it the instrument list above and then asked it this:

Imagine you're writing a feature article for a music magazine about the album 'House of the Head'. Describe the unique sound and musical experience the album offers, considering the wide array of instruments and the blend of electronica, worldbeat, and orchestral music.

And here's what it told me:

If you plan to promote your music, you're going to need a bio. Now, as it turns out, I have a bunch of bios. I have my very pompous primary professional bio, and I've also had to create bios focused on certain areas of my work for various clients and speaking gigs over the years. But, without a doubt, none of them was suitable as a musician bio.

I wasn't entirely sure how to write a musician bio, but I knew that many artists on Spotify had them listed there. So I found three from artists I like listening to and captured their bios into a text file. Then I created this first of two queries:

I am presenting you with three example artist bios. Each individual bio is enclosed in >>> <<<. Analyze all three bios and discuss the characteristics common across all of them that make them particularly suited to describe musicians and artists.

I then embedded the three bios in >>><<< blocks and fed it all to ChatGPT. This is what I got back:

That's incredibly useful. Next, I fed it both the music description from above and my long and pompous professional bio. Then I asked it this:

Now, take the professional bio and music description for David Gewirtz, and using the criteria and style identified in the musician bio format, write an artist's bio for David Gewirtz.
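The two prompts above amount to a simple two-step chat: first the example bios, each wrapped in its own delimiter, then a follow-up request in the same conversation. As a minimal sketch, assuming everything is pasted into ChatGPT as plain text (the article doesn't describe any tooling beyond that), here's how both prompts could be assembled. The function names are hypothetical, the instruction text is copied from the prompts quoted above, and the example bios are placeholders, not the actual Spotify bios.

```python
# Hypothetical helpers for assembling the two bio prompts as plain text.
# The instruction wording is copied from the prompts quoted in the article.

ANALYSIS_INSTRUCTIONS = (
    "I am presenting you with three example artist bios. Each individual bio is "
    "enclosed in >>> <<<. Analyze all three bios and discuss the characteristics "
    "common across all of them that make them particularly suited to describe "
    "musicians and artists."
)

BIO_INSTRUCTIONS = (
    "Now, take the professional bio and music description for David Gewirtz, and "
    "using the criteria and style identified in the musician bio format, write an "
    "artist's bio for David Gewirtz."
)


def delimit(text: str) -> str:
    """Wrap one example bio in >>> <<< so the model can tell where it starts and ends."""
    return f">>>{text.strip()}<<<"


def build_analysis_prompt(example_bios: list[str]) -> str:
    """Step 1: the instructions, followed by each example bio in its own delimited block."""
    if len(example_bios) != 3:
        # The quoted instructions say "three", so keep the inputs consistent with them.
        raise ValueError("expected exactly three example bios")
    return "\n\n".join([ANALYSIS_INSTRUCTIONS] + [delimit(b) for b in example_bios])


def build_bio_request(music_description: str, professional_bio: str) -> str:
    """Step 2: sent in the same chat, so the model can reuse its own analysis from step 1."""
    return "\n\n".join(
        [music_description.strip(), professional_bio.strip(), BIO_INSTRUCTIONS]
    )


if __name__ == "__main__":
    print(build_analysis_prompt(["Example bio one.", "Example bio two.", "Example bio three."]))
```

The >>> <<< markers are just the convention used in this project. What matters is that each sample bio has an unambiguous start and end, so the model analyzes three separate bios rather than one long blob of text.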

You can see the result on the House of the Head website, on the bio page. Since I used AI for nearly all the graphics on my music website, it's probably fair to point you there now.

We'll be spending some more time there next.

Most musicians need a website for promotion. It's a place where all the information needed by journalists, fans, and playlist curators can go to find out about your music.

At this point, there are three main ways that AI can help with website creation. The first is creating the actual site's structure and look. The second is helping with the prose. The third is helping with images.

In preparation for this project, I looked at more than 10 vendors that offer AI-based website creation and I was very unimpressed. Most asked a few questions and then created a site based on the answers to the questions.

But the sites seemed very canned. I wouldn't be surprised if vendors created 50 or so pre-built templates for the most common site categories and then just generated the site from the pre-built template, calling it "AI".

That's why I'm not naming names. I expect that this area will see improvement eventually, but unless you want something very basic and fairly generic, AI website generation is not ready for prime time -- yet.

For now, I decided it would be easier simply to build my own site using WordPress and a theme (I used Divi from Elegant Themes) and host the site on one of the servers I'm already paying for. For the record, I chose Divi because I've previously used it on another site, it's pretty good, and I have an already-paid license for it. There are certainly other excellent WordPress themes and even some that are musician focused.

Here is where I relied very heavily on AI tools. You saw how I used ChatGPT to create a musician's bio. That's one page of the site, but it's an important page.

I used AI to create all of the site's original images. This includes the home page hero image:

Both the hero image and the album image were created in Midjourney. Then the final image was composited in Photoshop and the text added in Photoshop as well.

Screenshot by David Gewirtz

I also used AI to create the site's wide banner, as well as two more spotlight images on the bio page. There's a lot to unpack here, so I'm going to break things out into their own sections.

I have been notoriously camera-shy. This is odd for a guy who appears in a couple of hundred YouTube videos and spent a good part of the 2010s splattered all over network TV doing guest commentary, but it's true. In my younger days, if there was a camera at an event, I went the other way. As such, there are very few pictures of me as a younger man.

Back in the day, I was required to take a few publicity stills for work, and I did sit in front of a photographer on a few occasions. It was a difficult experience for everyone involved, and the pictures were mostly mediocre. I did get one good shot of me looking over sunglasses with a flag behind me, but that was taken more than a decade ago, back when I was doing a lot more work as a political pundit.

Recently, my way of generating images of myself has been to grab stills from my YouTube videos. This works well for tech-related images, like my Facebook profile picture. But musicians are supposed to have a lot more style.

Techie David could get away with being charmingly geeky. But musician David had to be cool.

Actually, this is worth a minute of serious discussion. Music imagery is both an art and a science. If you look at the publicity photos of most musicians, they sure don't reflect how the artists look before coffee on a Saturday morning. Instead, they're often stage or studio shots that are carefully crafted to project an image and a specific feeling.

They're meant to convey an impression of the artist that's not exactly tied to their day-to-day real life. For my album and future releases, I needed images that weren't reflective of the everyday me. I needed stylized images that fit the musician vibe.

To pull it off, I spent a lot of time in Midjourney, with the help of Insight FaceSwap and Adobe Generative Fill.

Midjourney allows you to upload an image, which the tool will then incorporate into its AI generation of new images. I started with this basic image of me talking into a mic, which I've been using as my social media profile image.

You upload an image into Midjourney by clicking the plus button. Once the image is uploaded, you right-click on it to get the URL, and then you paste that URL after the /imagine command in Discord (Midjourney lives inside Discord, as do a few other AI tools), followed by whatever prompt you want.
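In other words, the upload-then-paste routine boils down to a single line of text: /imagine, then the image's URL, then the text prompt, optionally followed by parameters such as --ar (which shows up later in this project). Here's a minimal sketch that assembles that line, assuming you still paste it into Discord yourself. The helper name is made up, the URL is a hypothetical placeholder, and the code doesn't talk to Midjourney or Discord at all.

```python
from typing import Optional


def build_imagine_command(image_url: str, prompt: str, aspect_ratio: Optional[str] = None) -> str:
    """Assemble the text to paste into Discord: /imagine, the uploaded image's URL,
    the text prompt, and an optional --ar width:height parameter."""
    parts = ["/imagine", image_url.strip(), prompt.strip()]
    if aspect_ratio is not None:
        width, _, height = aspect_ratio.partition(":")
        # Only accept simple positive whole-number ratios such as 9:16 or 16:9.
        if not (width.isdigit() and height.isdigit()) or int(width) == 0 or int(height) == 0:
            raise ValueError(f"aspect ratio should look like 9:16, got {aspect_ratio!r}")
        parts.append(f"--ar {int(width)}:{int(height)}")
    # Drop empty pieces so a blank prompt doesn't leave a double space.
    return " ".join(part for part in parts if part)


if __name__ == "__main__":
    seed = "https://example.com/seed-image.png"  # hypothetical URL, not a real upload
    print(build_imagine_command(seed, "cyberpunk"))
    print(build_imagine_command(seed, "typing on qwerty computer keyboard, cyberpunk", "9:16"))
```

Putting the image URL first mirrors the order described above. Keeping the parameters at the end makes them easy to tweak between attempts, which matters when it takes as many tries as it did here.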

It took a lot of tries. Here are a bunch I ruled out.

You need a pretty thick skin and a sense of humor to do this with Midjourney. Many other results were even weirder.

Screenshot by David Gewirtz

But then I just appended "cyberpunk" after the URL and I got this.

It was perfect. It somehow (I'm sure it was random chance) picked a leather jacket that looks almost exactly like the one I've been wearing for a decade now. This image became the main image on my music site, and my profile avatar for the various streaming services that require you to specify an artist image.

At the top of each page is a wide banner. The original Midjourney image just isn't that wide.

But I loaded the image into Photoshop, added more canvas space on either side of the original image, and Photoshop Generative Fill gave me a much wider image.

Sweet.

Since my music bio talked about my techie roots, I decided that it needed a picture of me with a keyboard. Rather than starting with the previous radio show image I used to generate my hero image, I used the Midjourney URL of the actual hero image itself. I then fed Midjourney a ton of prompts until I arrived at:

/imagine typing on qwerty computer keyboard, cyberpunk, lightning, data center --ar 9:16

The --ar parameter sets the aspect ratio, which got me the tall image.

Like me, but not me.

Screenshot by David Gewirtz

The image above was fine, except it wasn't my face. To fix that, I used Insight FaceSwap. I uploaded another image of me that showed my face pretty well:

The real me. After coffee.

David Gewirtz

Then, using the /swapid command, I uploaded the hacker image from above and let FaceSwap do its magic. It returned this. It's subtle, but this is much more me than the previous image.

The original is on the left. The face match version is on the right. Because the one on the left is also based on my face, they're similar. But the one on the right is a bit more me.

Screenshot by David Gewirtz

I wanted one more image on the bio page -- a picture of me with my car. I drive a red Dodge Challenger, which I earned the right to drive by virtue of successfully passing into midlife while managing an ongoing stream of crisis experiences.

Using the same seed image technique, I told Midjourney this:

/imagine standing in front of red dodge challenger, cyberpunk

And I got back this:

It's close, but needs work.

Screenshot by David Gewirtz

As you can see, it's not really my face. And that hair. That was most definitely not my hair.

To fix it, I first used FaceSwap to put my face on my body. Then I saved the image onto my desktop, opened it in the Photoshop beta, and selected the area with the hair. Clicking the Generative Fill button, I told Photoshop to give me curly hair. I also had Photoshop Generative Fill clean up the street a bit and widen the image to fit the web page I wanted to use it on.

It took about 12 attempts, but then I got this image, which wasn't bad. All I did to tweak it was to add a bit of gray, and it now much more closely represents what I look like:

Before on the left, after on the right. The guy on the right is pretty much me. The guy on the left, not so much.

Screenshot by David Gewirtz

And here's how the final image came out:

Notice that there's more street on both the left and right sides of the image. That's Photoshop Generative Expand in action.

Screenshot by David Gewirtz

I know I just gave you a really fast description of how to do a face match, which is a problem many Midjourney users are trying to solve. Stay tuned. This article is too long for an in-depth how-to, but I plan to produce a guide on getting a perfect face match using Midjourney AI and FaceSwap.

The interesting secret about the music business now compared to the 1990s and earlier is that distribution today is disintermediated on a mammoth scale. Streaming services make it possible for independent artists to reach a worldwide audience, mostly without gatekeepers adjudicating suitability for some marketing strategy or another.

The key to all of this is a category of cloud service called "music distribution services." For a shockingly nominal fee (as little as $10), you can get a track uploaded to all of the major services. I paid $49 to CD Baby to distribute my EP, and they did just that. It's now on Spotify, Apple Music, Amazon Music, and about 150 other streaming platforms.

Everything I've done in getting my EP out could have been done without an AI's help. I have a ton of product marketing experience (back in the day I was a product marketing director for a major software company). But while product marketing and producing music are similar trades, there is a lot of domain-specific music industry knowledge I didn't have going into this process. More than that, there's also the music industry jargon.

To become a verified artist on Spotify, for example, it was up to me to properly fill out all the forms, sign up for the artist account, and curate the music. I also went to Copyright.gov to register my copyrights online, and signed up with a performing rights organization (PRO) so royalties on radio play will be collected. There is still a lot of product management work to getting music out, but if you're willing to do the work, you can get distribution.

Screenshot by David Gewirtz

The next step was promotion. I'm using a service called Groover to introduce my tracks to playlist curators, who then decide if they want to add my music to their playlists. So far, four such curators have added my tracks to their playlists, which means I'll be able to reach listeners beyond my circle of friends and social media followers.

As a good researcher, I was able to pick up the procedural knowledge necessary to get the music out. But ChatGPT and Midjourney were able to provide the stylistic touches for the project that I wouldn't otherwise have been able to produce.

While there's always some concern about AI usage, it's clear I wouldn't have done this project -- at least at this time -- without the help of the AI-generated analysis, images, and descriptions.

Over the next year, I intend to make four more "waterfall" releases (three more EPs and the full 12-track album). I composed all the tracks quite some time ago. Most of the descriptions, and all of the art, have already been generated by the AI tools.

And with that, the first wave of this project is done. If you want to listen to any of these tracks, point your browser to House of the Head and click directly into the music service of your choice.

So, does this project give you any ideas? Have you been wanting to distribute music and now have a better roadmap? Let me know in the comments below.

Disclaimer: Using AI-generated images could lead to copyright violations, so people should be cautious if they're using the images for commercial purposes.

You can follow my day-to-day project updates on social media. Be sure to subscribe to my weekly update newsletter on Substack, and follow me on Twitter at @DavidGewirtz, on Facebook at Facebook.com/DavidGewirtz, on Instagram at Instagram.com/DavidGewirtz, and on YouTube at YouTube.com/DavidGewirtzTV.
