The world of open-source software continues to let companies distinguish themselves from generative AI giants like OpenAI and Google. On Wednesday, data warehousing cloud vendor Snowflake announced an open-source AI model the company says can be more efficient than Meta's recently introduced Llama 3 at enterprise tasks, such as SQL coding for database retrieval.

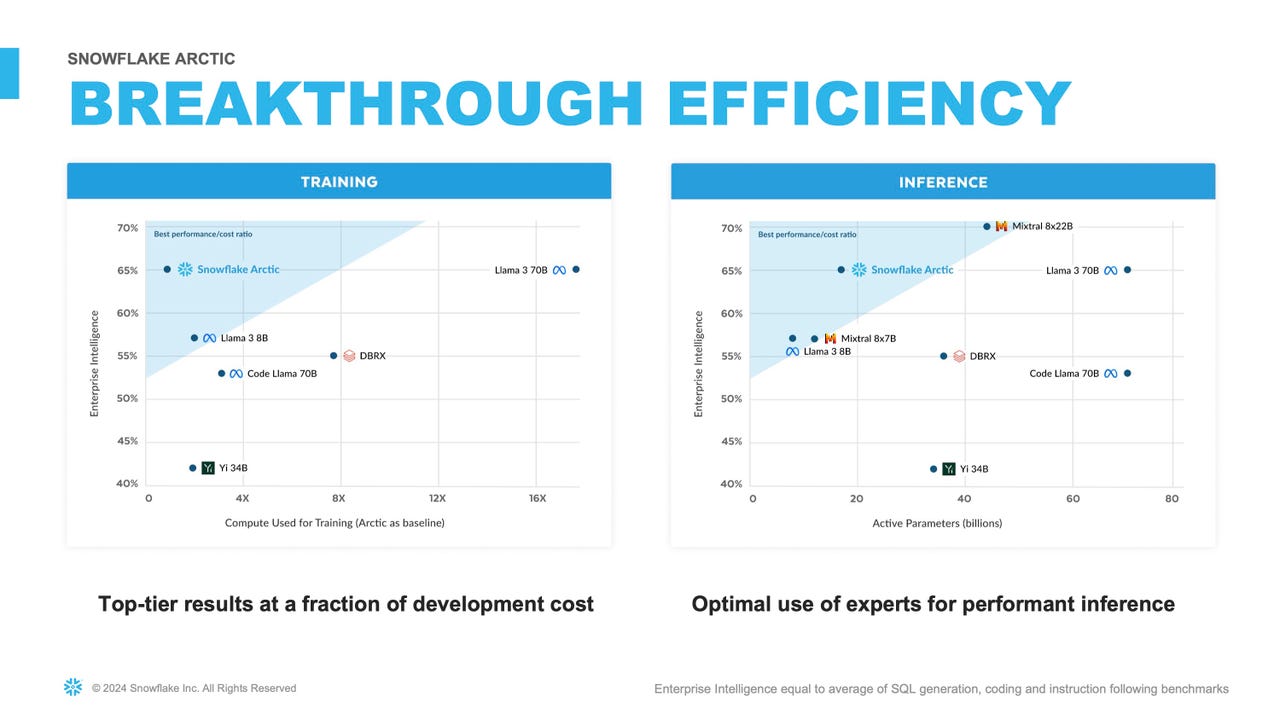

The large language model (LLM), called Arctic, is "on par or better than both Llama 3 8B and Llama 2 70B on enterprise metrics, while using less than half of the training compute budget," Snowflake claims on its GitHub repository.

(An "AI model" is the part of an AI program that contains the numerous neural net parameters and activation functions that largely determine how the program behaves.)

Snowflake has released all of Arctic's parameters and model code under the Apache 2.0 open-source license, along with the company's proposed "data recipe" for training and a collection of research insights. A Hugging Face repository is available as well.

The Arctic program is available immediately from Snowflake as an inference service on its Cortex offering. Arctic will also be available on Amazon AWS, Hugging Face, and other venues, Snowflake said.

"This is a watershed moment for Snowflake, with our AI research team innovating at the forefront of AI," Snowflake CEO Sridhar Ramaswamy said in prepared remarks.

Snowflake emphasizes Arctic's ability to hold its own against Llama 3 and DBRX, not simply on enterprise tasks but also on common machine learning benchmarks such as the "MMLU" textual understanding task:

Similarly, despite using 17x less compute budget, Arctic is on par with Llama3 70B in enterprise metrics like Coding (HumanEval+ & MBPP+), SQL (Spider) and Instruction Following (IFEval). It does so while remaining competitive on overall performance, for example, despite using 7x less compute than DBRX it remains competitive on Language Understanding and Reasoning (a collection of 11 metrics) while being better in Math (GSM8K).

Snowflake has yet to publish a formal paper, but the company has offered some technical details on GitHub. The approach taken by Snowflake's AI leads (Yuxiong He, Samyam Rajbhandari, and Yusuf Ozuysal) resembles the one taken recently by database vendor Databricks with its DBRX LLM and by AI startup AI21 Labs with its Jamba LLM.

The approach combines a traditional attention-based transformer model with what's called a "mixture of experts" (MoE), an LLM technique that switches off some of the neural weights for any given input in order to reduce the computation required. MoE is among the tools Google used for its recent Gemini LLM.
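
To make the routing idea concrete, here is a toy, pure-Python sketch of top-k gating, the mechanism MoE layers commonly use to pick which experts run for each input (Snowflake says Arctic uses top-2). This is not Snowflake's code: the linear router, the "experts" that merely scale their input, and the names `moe_forward` and `gate_vectors` are all hypothetical, and real systems do this with tensors spread across many GPUs.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_vectors: List[Vector],
                experts: List[Expert], k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token through the top-k experts chosen by a linear router.

    Returns the mixed output and the indices of the experts that actually ran.
    """
    # Router: one score per expert (dot product of the token with a gate vector).
    scores = [sum(g * xi for g, xi in zip(gate, x)) for gate in gate_vectors]
    # Keep the k best-scoring experts; every other expert is skipped entirely,
    # which is where the compute saving comes from.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Turn the selected scores into mixing weights that sum to 1.
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(mix, chosen):
        y = experts[i](x)
        out = [o + w * yj for o, yj in zip(out, y)]
    return out, chosen


# Toy usage: four hypothetical "experts" that just scale their input.
experts: List[Expert] = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
gates = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
out, used = moe_forward([1.0, 2.0], gates, experts, k=2)
print(used, out)  # only experts 2 and 1 are evaluated
```

Normalizing only the selected scores, as done here, is one common variant; other designs apply the softmax over all experts before picking the top k.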

Snowflake calls its variant a "Dense - MoE Hybrid Transformer" and describes the work as follows:

Arctic combines a 10B dense transformer model with a residual 128x3.66B MoE MLP resulting in 480B total and 17B active parameters chosen using a top-2 gating.
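
The parameter counts in that description can be sanity-checked with simple arithmetic. This back-of-envelope sketch assumes the total is just the dense part plus all 128 experts, and the active count is the dense part plus the two experts picked by top-2 gating; it ignores smaller components such as the router, so it only approximately reproduces Snowflake's rounded figures.

```python
# Figures quoted by Snowflake, in billions of parameters.
DENSE_B = 10.0        # dense transformer
NUM_EXPERTS = 128     # experts in the residual MoE MLP
EXPERT_B = 3.66       # parameters per expert
TOP_K = 2             # experts activated per token (top-2 gating)

# Every expert's weights exist in the model...
total_b = DENSE_B + NUM_EXPERTS * EXPERT_B
# ...but each token only passes through the dense part plus TOP_K experts.
active_b = DENSE_B + TOP_K * EXPERT_B

print(f"total ~ {total_b:.2f}B, active ~ {active_b:.2f}B")
# -> total ~ 478.48B, active ~ 17.32B
```

That gap between roughly 480B stored and roughly 17B used per token is the point of the design: per-token compute scales with the active parameters, not the total.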

In place of a formal paper, Snowflake has published two blog posts to discuss the approach and the training procedure as part of an expanding Arctic Cookbook webpage.

The Hugging Face repository also hosts a demo of Arctic performing inference (that is, making predictions) through a chat prompt.

When I asked the Arctic demo to explain the difference between the two versions of Arctic, "base" and "instruct," it produced a decent summary in a snap, noting that "Arctic Instruct is a variant of the Arctic Base model that is specifically designed for instruction following and task-oriented conversations."

Arctic follows Snowflake's release earlier this month of a family of "text-embedding models," which excel at capturing how words relate to one another, so that relevant text can be retrieved, as in search.
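
Retrieval with embedding models generally works by turning the query and each document into vectors and ranking the documents by how similar their vectors are to the query's. The sketch below uses cosine similarity over made-up three-dimensional vectors; real embedding models, Snowflake's included, produce far longer vectors, and the document names and numbers here are purely illustrative.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank(query_vec: Sequence[float], doc_vecs: Dict[str, List[float]]) -> List[str]:
    """Return document names ordered from most to least similar to the query."""
    return sorted(doc_vecs, key=lambda d: cosine(query_vec, doc_vecs[d]), reverse=True)


# Hypothetical embeddings standing in for a real model's output.
docs = {
    "sql_guide": [0.9, 0.1, 0.0],
    "cooking": [0.0, 0.2, 0.95],
    "data_warehouse": [0.7, 0.6, 0.1],
}
query = [0.8, 0.3, 0.05]
print(rank(query, docs))  # -> ['sql_guide', 'data_warehouse', 'cooking']
```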

Hot tags:

Innovation