Image: Microsoft

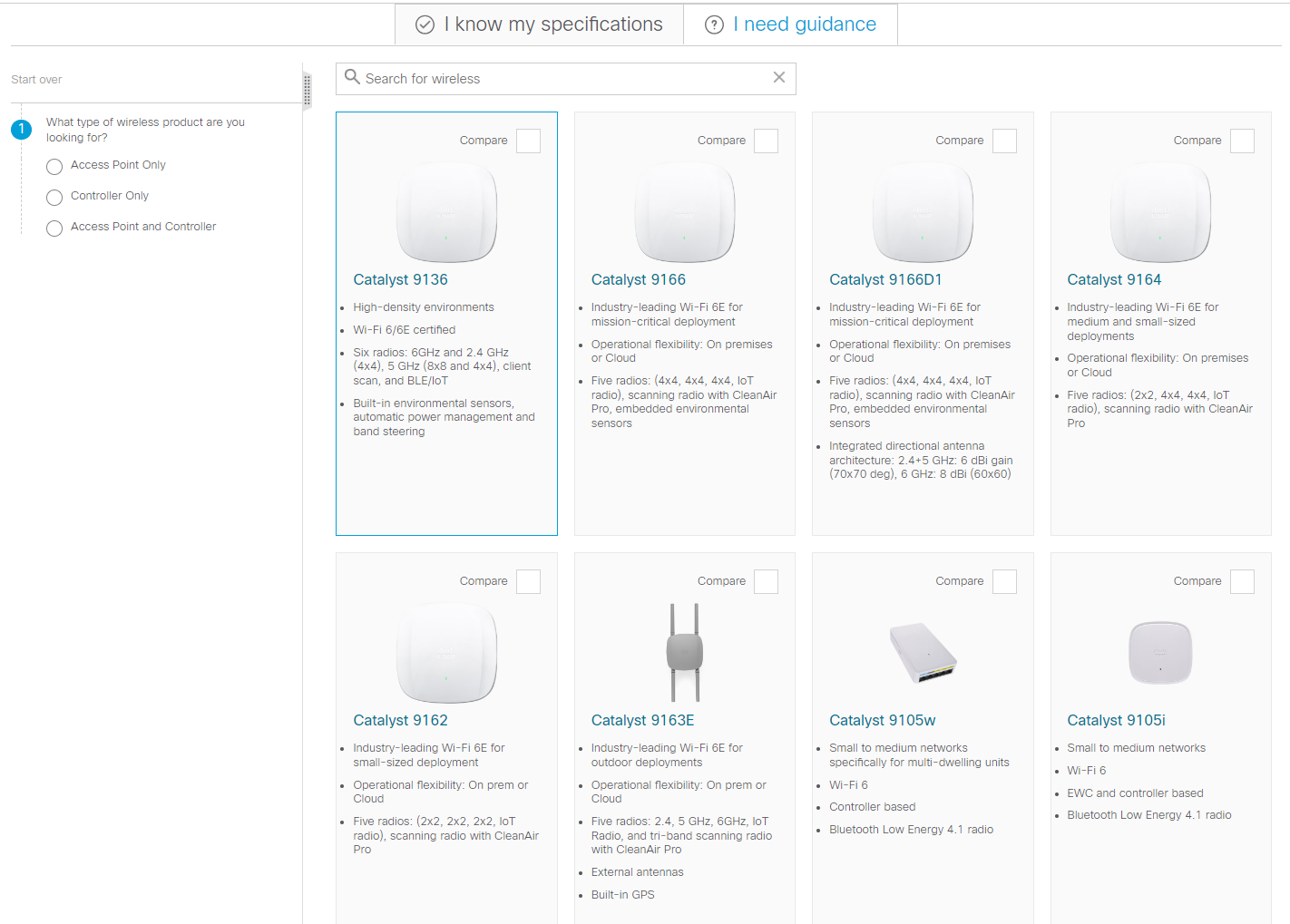

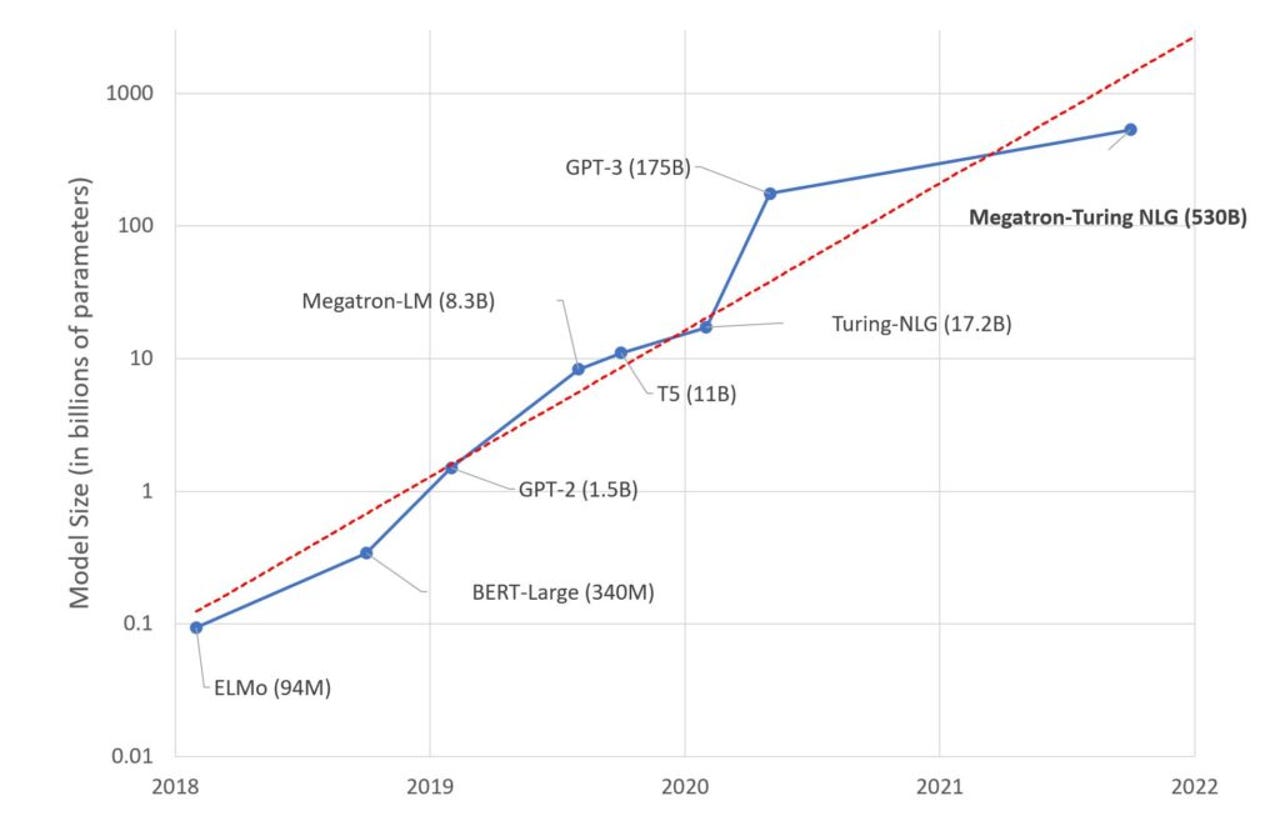

Nvidia and Microsoft have teamed up to create the Megatron-Turing Natural Language Generation (MT-NLG) model, which the duo claims is the "most powerful monolithic transformer language model trained to date".

The AI model has 105 layers, 530 billion parameters, and operates on chunky supercomputer hardware like Selene.

By comparison, the vaunted GPT-3 has 175 billion parameters.

"Each model replica spans 280 NVIDIA A100 GPUs, with 8-way tensor-slicing within a node, and 35-way pipeline parallelism across nodes," the pair said in a blog post.

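The quoted layout comes down to simple multiplication: 8 tensor slices per node times 35 pipeline stages gives the 280 GPUs in each replica. The Python sketch below makes that concrete. It is illustrative only and is not Nvidia's or Microsoft's code. The helper `gpu_coordinates` and its rank ordering (tensor slices numbered consecutively within a node) are hypothetical. The even split of the 105 layers across the 35 pipeline stages is also an assumption, because the blog post quote does not state it.

```python
from typing import Tuple

# Figures quoted in the article and the Microsoft/Nvidia blog post.
TENSOR_PARALLEL = 8      # 8-way tensor slicing within a node
PIPELINE_PARALLEL = 35   # 35-way pipeline parallelism across nodes
NUM_LAYERS = 105         # transformer layers in MT-NLG

# One model replica needs one GPU per (pipeline stage, tensor slice) pair.
GPUS_PER_REPLICA = TENSOR_PARALLEL * PIPELINE_PARALLEL  # 280

# Assumption (not stated in the source): layers are split evenly across stages.
assert NUM_LAYERS % PIPELINE_PARALLEL == 0
LAYERS_PER_STAGE = NUM_LAYERS // PIPELINE_PARALLEL  # 3


def gpu_coordinates(rank: int) -> Tuple[int, int]:
    """Hypothetical helper: map a GPU's index within one replica to
    (pipeline_stage, tensor_slice).

    Assumes tensor slices are numbered consecutively, so the 8 slices of
    each pipeline stage share one node.
    """
    if not 0 <= rank < GPUS_PER_REPLICA:
        raise ValueError(f"rank must be in [0, {GPUS_PER_REPLICA})")
    return divmod(rank, TENSOR_PARALLEL)


if __name__ == "__main__":
    print(GPUS_PER_REPLICA)      # 280
    print(LAYERS_PER_STAGE)      # 3
    print(gpu_coordinates(0))    # (0, 0)
    print(gpu_coordinates(279))  # (34, 7)
```

Under these assumptions, the last GPU in a replica holds tensor slice 7 of pipeline stage 34, the final stage. The usual rationale for this split in Megatron-style training is that tensor slicing exchanges data at every layer and so benefits from the fast interconnect inside a node. Pipeline stages only pass activations to their neighbours, which tolerates the slower links between nodes.
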
The model was trained on 15 datasets totalling 339 billion tokens and, according to the pair, showed that larger models need less training to perform well.

However, because the model learns from real-world language samples, an old problem with AI reappeared: bias.

"While giant language models are advancing the state of the art on language generation, they also suffer from issues such as bias and toxicity," the duo said.

"Our observations with MT-NLG are that the model picks up stereotypes and biases from the data on which it is trained. Microsoft and Nvidia are committed to working on addressing this problem."

It wasn't so long ago that Microsoft's chatbot Tay turned full Nazi within hours of interacting with people on the internet.

Hot tags:

Artificial intelligence

Innovation