Since the release of DALL-E 2 at the end of 2022, text-to-image generators have been all the rage with plenty of worthy competitors entering the market. Now, over a year later, we are at the dawn of a new technology: AI video generation.

On Tuesday, Google Research released a research paper on Lumiere, a text-to-video diffusion model that can create highly realistic video from text prompts and other images.

Also: The best AI image generators of 2024: DALL-E 2 and alternatives

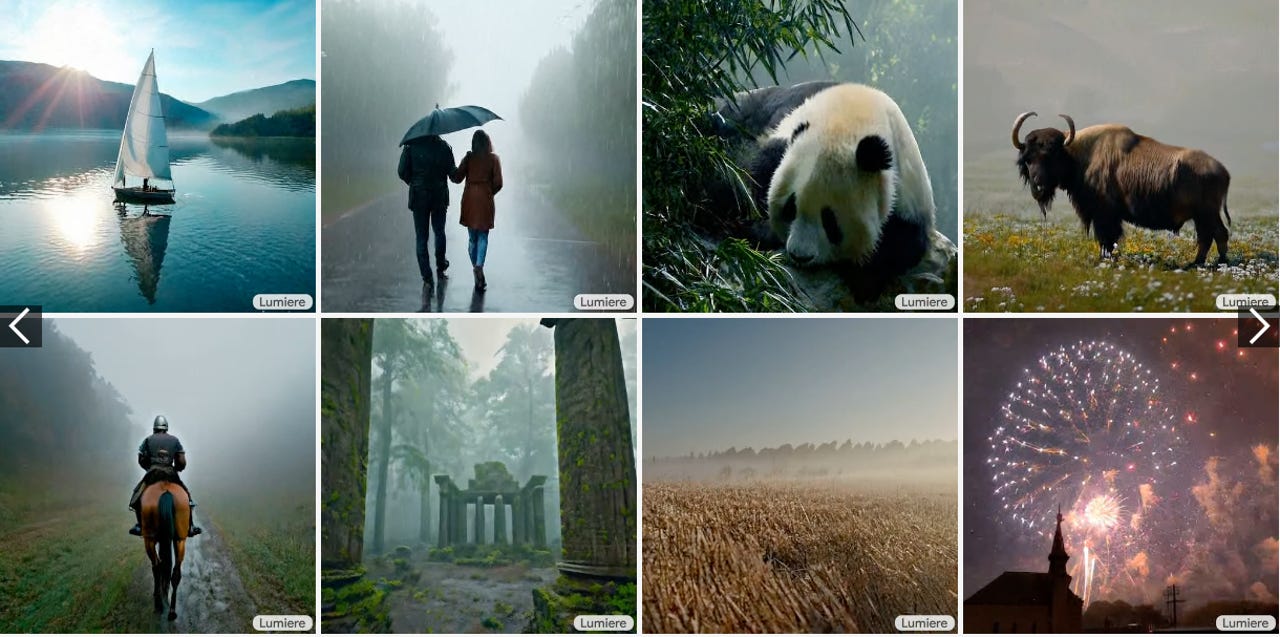

The model was designed to tackle a significant challenge in video generation synthesis, which is creating "realistic, diverse, and coherent motion," according to the paper. You may have noticed video generation models typically render choppy video, but Google's approach delivers a more seamless viewing experience, as seen in the video below.

Not only are the video clips smooth to watch, but they also look hyper-realistic, a significant upgrade from other models. Lumiere can achieve this through its Space-Time U-Net architecture, which generates the temporal duration of a video at once through a single pass.

This method of generating video deviates from other existing models, which synthesize distant keyframes. That approach inherently makes video consistency challenging to achieve, according to the paper.

Lumiere can generate video from different inputs, including text-to-video, which works like a regular image generator and generates a video from a text prompt, and image-to-video, which takes an image and uses its accompanying prompt to bring the photo to life in a video.

The model can also put a fun spin on video generation through stylized generation, which uses a single reference image to generate video in the target style using a user prompt.

In addition to generating video, the model can be used to edit existing video through various visual stylizations that modify a video to reflect a specific prompt, cinemagraphs that animate a specific area of a photo, and inpainting, which fills in missing or damaged areas in the video.

Also: 7 ways AI can fix your meetings, according to Microsoft

In the paper, Google measured Lumiere's performance to other prominent text-to-video diffusion models, including ImagenVideo, Pika, ZeroScope, and Gen2, by asking a group of testers to select the video they deemed better in terms of visual quality and motion, without knowing which model generated each video.

Google's model outperformed the others across all categories, including text-to-video quality, text-to-video text alignment, and image-to-video quality.

The model has yet to be released to the general public; however, if you are interested in learning more or watching the models in action, you can visit the Lumiere website, where you can see plenty of demos of the model performing the different tasks.

Tags quentes :

Inovação

Tags quentes :

Inovação