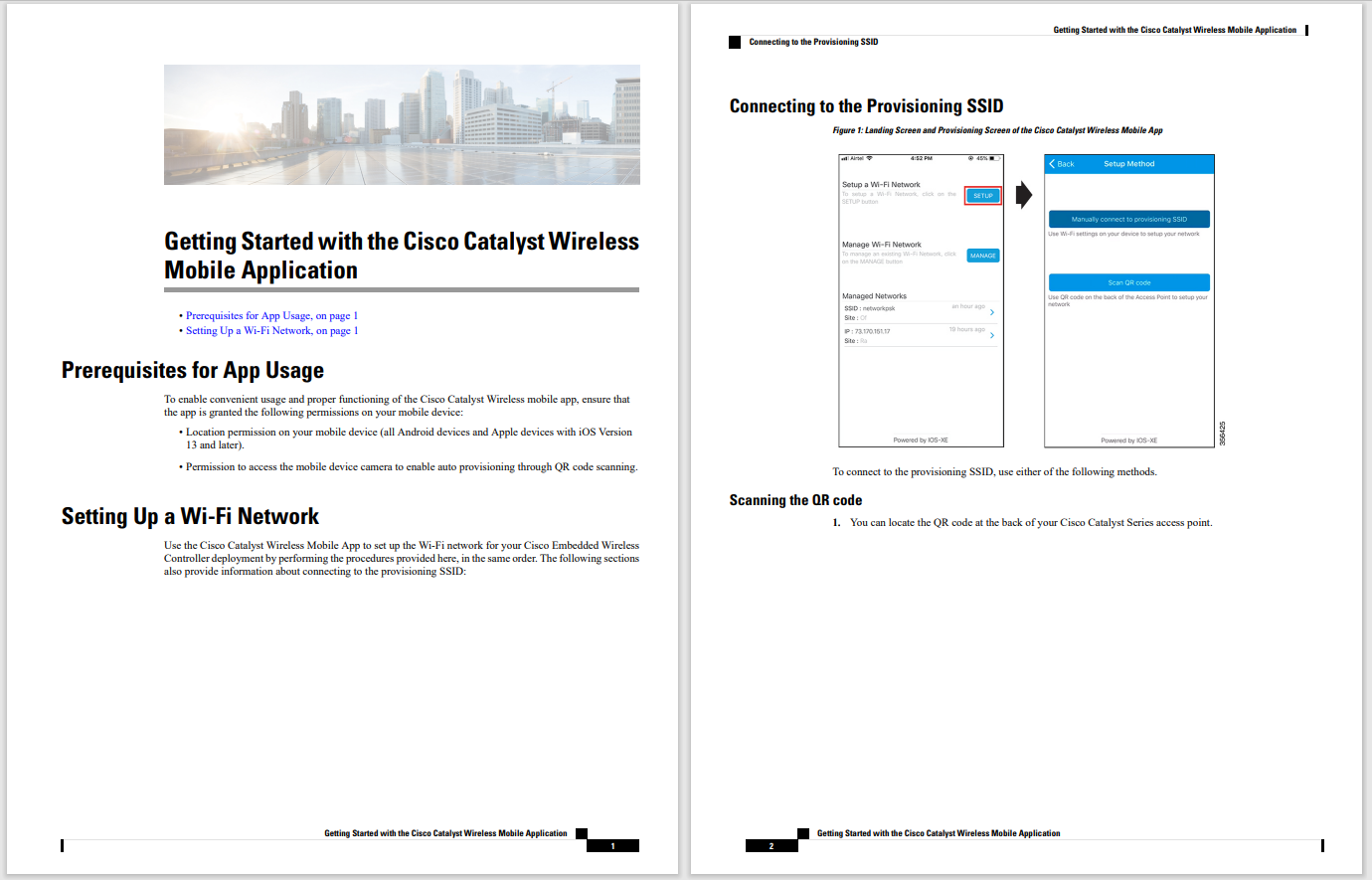

Cerebras co-founder and CEO Andrew Feldman, seen here standing atop packing crates for the CS-2 systems before their installation at the Santa Clara, California, hosting facility of partner Colovore.

Photo: Rebecca Lewington/Cerebras Systems

The fervor surrounding artificial intelligence "isn't a Silicon Valley thing, it isn't even a U.S. thing, it's now all over the world -- it's a global phenomenon," according to Andrew Feldman, co-founder and CEO of AI computing startup Cerebras Systems.

In that spirit, Cerebras on Thursday announced it has contracted to build what it calls "the world's largest supercomputer for AI," named Condor Galaxy, on behalf of its client, G42, a five-year-old investment firm based in Abu Dhabi, the United Arab Emirates.

The machine is focused on the "training" of neural networks. That is the part of machine learning in which a neural network's settings, its "parameters" or "weights," are tuned until they are good enough for the second stage, making predictions, which is known as the "inference" stage.
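To make the training/inference split concrete, here is a deliberately tiny Python sketch. It bears no relation to anything Cerebras or G42 actually runs. A one-weight model `y = w * x` is "trained" by plain gradient descent and then used for "inference" on a new input. The function names `train` and `predict`, the toy data, and the learning-rate and epoch values are all hypothetical choices made for illustration.

```python
def train(xs, ys, lr=0.01, epochs=500):
    """Training: tune a single weight w so that w * x approximates y.

    Plain gradient descent on mean squared error.
    """
    w = 0.0  # start from an untrained weight
    n = len(xs)
    for _ in range(epochs):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad  # nudge the weight downhill
    return w


def predict(w, x):
    """Inference: apply the already-tuned weight to a new input."""
    return w * x


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]  # toy data generated by y = 3x
w = train(xs, ys)
print(f"learned weight: {w:.3f}, prediction for x=10: {predict(w, 10.0):.2f}")
```

Training is the repeated loop over the data; inference is a single application of the learned weight. In a large neural network, the single weight becomes billions of parameters, which is where the bulk of the training compute goes.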

Condor Galaxy is the result, said Feldman, of months of collaboration between Cerebras and G42, and is the first major announcement of their strategic partnership.

The initial contract is worth more than a hundred million dollars to Cerebras, Feldman said in an interview. That amount is set to grow several times over, to hundreds of millions of dollars in revenue, as Cerebras builds out Condor Galaxy in multiple stages.

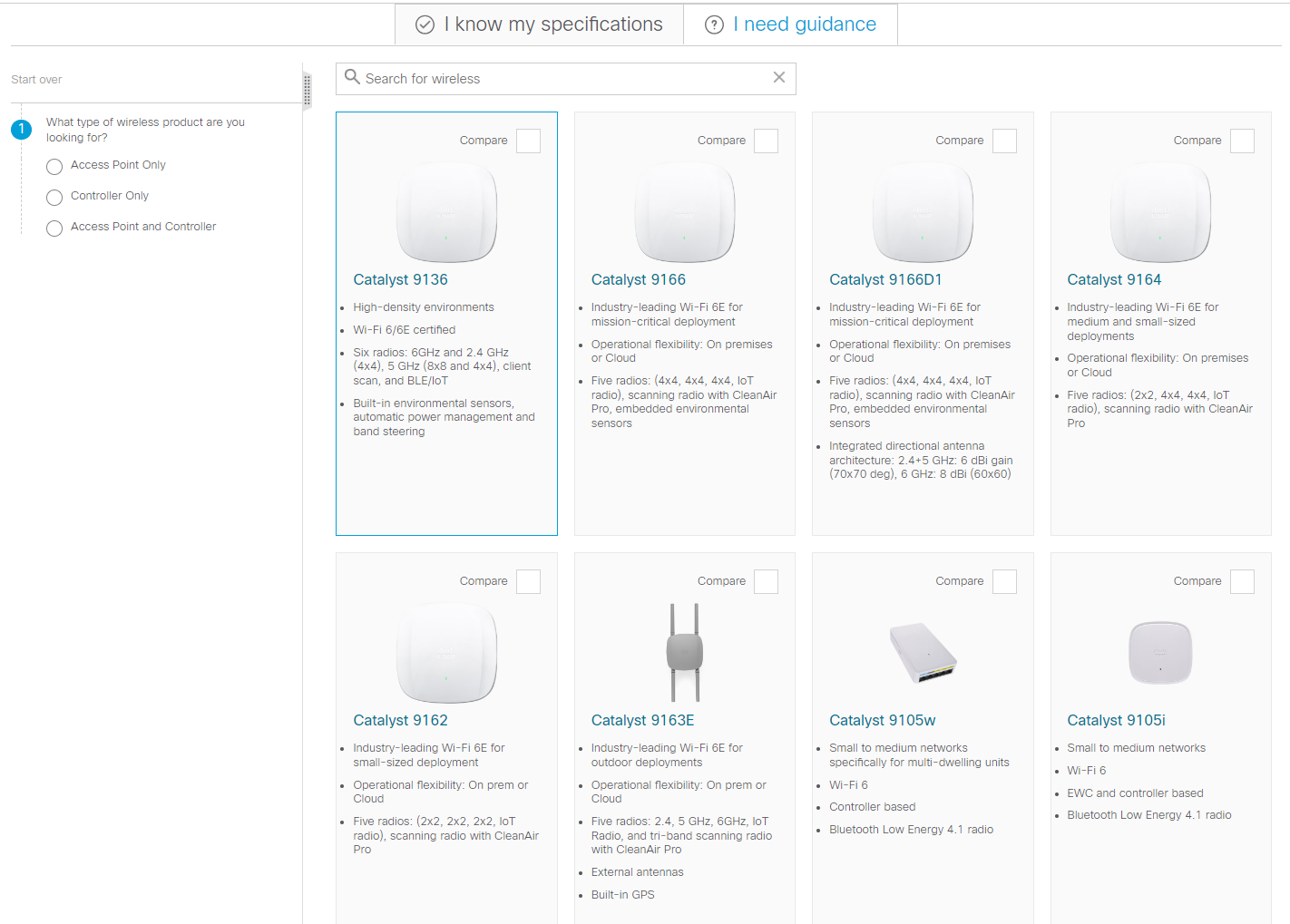

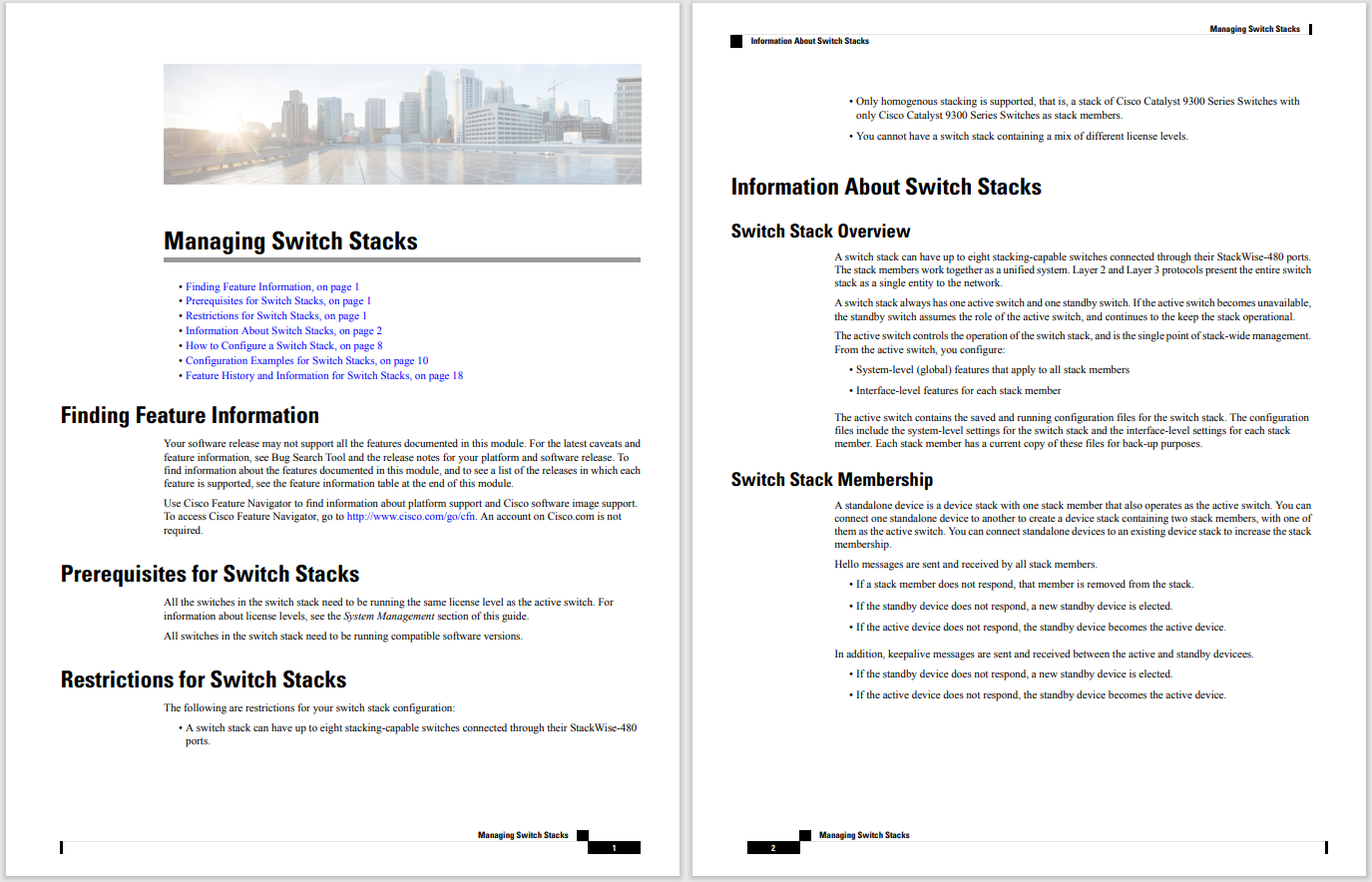

Condor Galaxy is named for a galaxy located 212 million light years from Earth. In its initial configuration, called CG-1, the machine is made up of 32 of Cerebras's special-purpose AI computers, the CS-2. Their chips, the "Wafer-Scale Engine," or WSE, together contain 27 million compute cores, 41 terabytes of memory, and 194 trillion bits per second of bandwidth. They are overseen by 36,352 AMD EPYC x86 server processor cores.
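As a rough sanity check on those aggregates, the sketch below divides each quoted figure evenly across the 32 CS-2s. It is an averaging exercise that assumes a uniform split. It does not describe how the hardware is physically laid out. The dictionary keys and the `per_system` helper are hypothetical names.

```python
# Aggregate CG-1 phase-1 figures as quoted in the article.
CG1_PHASE1 = {
    "systems": 32,           # CS-2 machines
    "cores": 27_000_000,     # compute cores across all WSE chips (rounded)
    "memory_tb": 41,         # terabytes of memory
    "bandwidth_tbps": 194,   # trillion bits per second
    "amd_epyc": 36_352,      # AMD EPYC figure quoted for CG-1
}


def per_system(totals: dict) -> dict:
    """Return each aggregate divided evenly by the number of CS-2 systems.

    Assumes a uniform split, so every value is only an average.
    """
    n = totals["systems"]
    return {key: value / n for key, value in totals.items() if key != "systems"}


for key, value in per_system(CG1_PHASE1).items():
    print(f"{key}: {value:,.2f} per CS-2")
```

The core count works out to roughly 844,000 per system. That is close to the 850,000 cores Cerebras quotes for a single WSE-2, as you would expect with one wafer-scale chip per CS-2.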

The 32 CS-2 machines networked together as CG-1.

Photo: Rebecca Lewington/Cerebras Systems

The machine runs at 2 exa-flops, meaning it can perform two billion billion floating-point operations per second.
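The units are easy to lose track of. The minimal Python sketch below converts the quoted figure to raw operations per second and to a per-system share in peta-flops. It assumes the 2 exa-flops is shared evenly by the 32 CS-2s, which is an assumption and not a Cerebras specification. The helper names are hypothetical.

```python
EXA = 10**18   # 1 exa-flop = 10^18 (a billion billion) operations per second
PETA = 10**15  # 1 peta-flop = 10^15 operations per second


def to_flops(exaflops: float) -> float:
    """Convert exa-flops to raw floating-point operations per second."""
    return exaflops * EXA


def petaflops_per_system(exaflops: float, systems: int) -> float:
    """Even share of total throughput per system, in peta-flops (assumed split)."""
    return to_flops(exaflops) / systems / PETA


total = to_flops(2)                  # CG-1, phase 1
share = petaflops_per_system(2, 32)  # spread over 32 CS-2s
print(f"{total:.1e} FLOP/s in total, about {share} peta-flops per CS-2")
```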

The machine's size continues a pattern of bigness at Cerebras, which was founded in 2016 by seasoned semiconductor and networking entrepreneurs and innovators. The company stunned the world in 2019 with the unveiling of the WSE, the largest chip ever made, which takes up almost the entire surface of a 12-inch semiconductor wafer. The CS-2 machines are powered by its successor, the WSE-2, introduced in 2021.

The CS-2s in CG-1 are supplemented by Cerebras's special-purpose "fabric" switch, SwarmX, and its dedicated memory hub, MemoryX, which together are used to cluster the CS-2s.

The claim to be the largest supercomputer for AI is somewhat hyperbolic, because there is no general registry of the size of AI computers. The standard ranking of supercomputers, the TOP500 list, maintained by Prometeus GmbH, covers conventional supercomputers used for so-called high-performance computing.

Those machines are not comparable, said Feldman, because they work with what's called 64-bit precision, in which each operand (the value the computer operates on) is represented by sixty-four bits. The Cerebras system represents data in a simpler form called "FP-16," using only sixteen bits for each value.
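You can see the practical difference between the two formats with Python's standard `struct` module. Its `"e"` format code packs IEEE 754 half precision (16 bits) and `"d"` packs double precision (64 bits). The sketch assumes that Cerebras's "FP-16" behaves like standard IEEE 754 half precision, which is an assumption made for illustration. The `round_trip` helper is hypothetical.

```python
import struct

FP16 = "<e"  # IEEE 754 half precision: 16 bits per value
FP64 = "<d"  # IEEE 754 double precision: 64 bits per value


def round_trip(value: float, fmt: str) -> float:
    """Pack a Python float into the given format and unpack it again."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


print(struct.calcsize(FP16) * 8, "bits vs", struct.calcsize(FP64) * 8, "bits")
print("0.1 stored as FP16:", round_trip(0.1, FP16))  # nearest 16-bit value
print("0.1 stored as FP64:", round_trip(0.1, FP64))

try:
    struct.pack(FP16, 70000.0)  # beyond the largest finite FP16 value, 65504
except OverflowError as err:
    print("FP16 overflow:", err)
```

Each FP-16 value takes a quarter of the storage of a 64-bit value, at the cost of fewer significant digits and a much smaller range. This trade-off is why flops counted at the two precisions are not directly comparable.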

Among 64-bit machines, Frontier, a supercomputer at the U.S. Department of Energy's Oak Ridge National Laboratory, is the world's most powerful, running at 1.19 exa-flops. But it cannot be directly compared to CG-1 at 2 exa-flops, said Feldman.

Certainly, the sheer compute of CG-1 puts it in rare company among the world's computers. "Think of a single computer with more compute power than half a million Apple MacBooks working together to solve a single problem in real time," offered Feldman.
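Feldman's comparison can be turned around to see what it implies per laptop. Assume that "more compute power than half a million MacBooks" is measured against CG-1's quoted 2 exa-flops. Then each MacBook is implicitly credited with less than 4 teraflops, and the sketch below simply performs that division. The quote does not specify a MacBook model or a numeric precision, so treat the result as a bound implied by the quote, not as a benchmark. The `implied_ceiling` helper is hypothetical.

```python
def implied_ceiling(total_flops: float, device_count: int) -> float:
    """If one machine beats N devices combined, each device must deliver
    less than total_flops / N."""
    return total_flops / device_count


CG1_FLOPS = 2 * 10**18  # CG-1 phase 1: 2 exa-flops (FP-16)
MACBOOKS = 500_000      # "half a million Apple MacBooks"

ceiling = implied_ceiling(CG1_FLOPS, MACBOOKS)
print(f"Implied per-MacBook ceiling: {ceiling / 10**12:.1f} teraflops")
```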

The Condor Galaxy machine is not physically in Abu Dhabi. It is installed at the facilities of Santa Clara, California-based Colovore, a hosting provider that competes in the market for cloud services with the likes of Equinix. Cerebras had previously announced in November a partnership with Colovore for a modular supercomputer named "Andromeda" to speed up large language models.

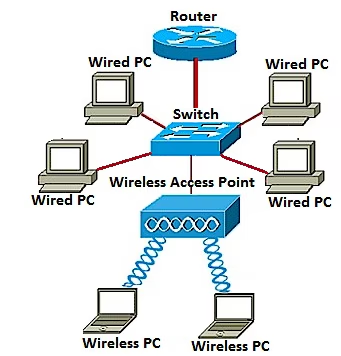

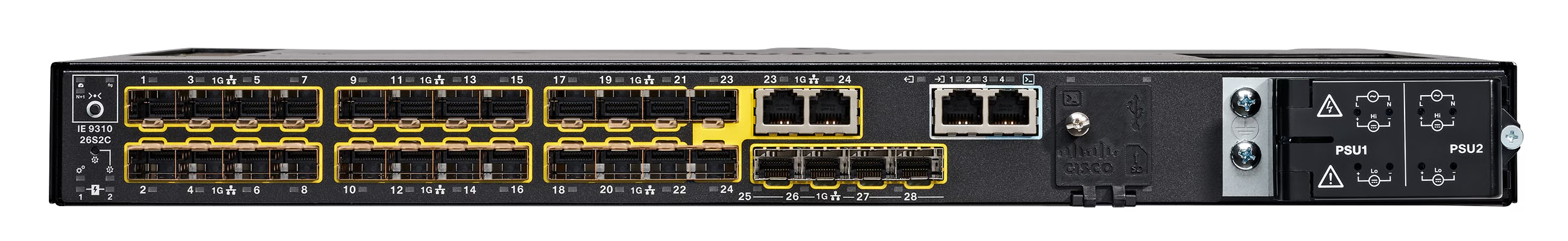

Stats of the CG-1 in phase 1

Cerebras Systems

Stats of the CG-1 in phase 2

Cerebras Systems

As part of the multi-year partnership, Condor Galaxy will scale through version CG-9, said Feldman. Phase 2 of the partnership, expected by the fourth quarter of this year, will double CG-1's footprint to 64 CS-2s, with a total of 54 million compute cores, 82 terabytes of memory, and 388 trillion bits per second of bandwidth. That machine will double the throughput to 4 exa-flops of compute.

Putting it all together, in phase 4 of the partnership, to be delivered in the second half of 2024, Cerebras will string together what it calls a "constellation" of nine interconnected systems, each running at 4 exa-flops, for a total of 36 exa-flops of capacity, at sites around the world, to make what it calls "the largest interconnected AI Supercomputer in the world."
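The phase arithmetic can be checked in a few lines. The sketch below assumes that throughput scales linearly with the number of CS-2s, using phase 1's ratio of 2 exa-flops per 32 systems. That is a simplification, since real clusters rarely scale perfectly. The `exaflops_for` helper is hypothetical.

```python
PHASE1_CS2 = 32        # CS-2 systems in CG-1, phase 1
PHASE1_EXAFLOPS = 2.0  # quoted phase-1 throughput


def exaflops_for(cs2_count: int) -> float:
    """Estimate exa-flops for a CS-2 count, assuming linear scaling
    from phase 1's ratio of 2 exa-flops per 32 systems."""
    return PHASE1_EXAFLOPS * cs2_count / PHASE1_CS2


phase2 = exaflops_for(64)          # CG-1 after phase 2 doubles to 64 CS-2s
machines = 9                       # nine interconnected systems in phase 4
constellation = machines * phase2  # each system quoted at 4 exa-flops

print(f"Phase 2 CG-1: {phase2} exa-flops")
print(f"Phase 4 constellation: {constellation} exa-flops")
```

Suppose each of the nine systems matched CG-1's phase-2 size. The total would then be 9 × 64 = 576 CS-2s. The article does not state that count, so it is only an inference under the same assumption.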

"This is the first of four exa-flop machines we're building for G42 in the U.S.," explained Feldman, "And then we're going to build six more around the world, for a total of nine interconnected, four-exa-flop machines producing 36 exa-flops."

The machine marks the first time Cerebras has not only built a clustered computer system but also operated it for a customer. As a result, the partnership gives Cerebras multiple avenues to revenue.

Direct sales by Cerebras to G42 will grow to hundreds of millions of dollars as the partnership moves through its various phases, said Feldman.

"Not only is this contract larger than all other startups have sold, combined, over their lifetimes, but it's intended to grow not just past the hundred million [dollars] it's at now, but two or three times past that," he said, alluding to competing AI startups including SambaNova Systems and Graphcore.

In addition, "Together, we resell excess capacity through our cloud," Feldman said, meaning that other Cerebras customers can rent capacity on CG-1 when G42 is not using it. The partnership "gives our cloud a profoundly new scale, obviously," he said, so that "we now have an opportunity to pursue dedicated AI supercomputers as a service."

That means whoever wants cloud AI compute capacity will be able to "jump on one of the biggest supercomputers in the world for a day, a week, a month if you want."

The ambitions for AI appear to be as big as the machine. "Over the next 60 days, we're gonna announce some very, very interesting models that were trained on CG-1," said Feldman.

G42 is a global conglomerate, Feldman notes, with about 22,000 employees in twenty-five countries and nine operating companies under its umbrella. The company's G42 Cloud subsidiary operates the largest regional cloud in the Middle East.

"G42 and Cerebras' shared vision is that Condor Galaxy will be used to address society's most pressing challenges across healthcare, energy, climate action and more," said Talal Alkaissi, CEO of G42 Cloud, in prepared remarks.

M42, a joint venture between G42 and fellow Abu Dhabi investment firm Mubadala Investment Co., is one of the largest genomics sequencers in the world.

"They're sort-of pioneers in the use of AI and healthcare applications throughout Europe and the Middle East," noted Feldman of G42. The company has produced 300 AI publications over the past 3 years.

"They [G42] wanted someone who had experience building very large AI supercomputers, and who had experience developing and implementing big AI models, and who had experience manipulating and managing very large data sets," said Feldman, "And those are all things we, we had, sort-of, really honed in the last nine months."

The CG-1 machines, Feldman emphasized, will be able to scale to larger and larger neural network models without a corresponding explosion in the amount of code needed.

"One of the key elements of the technology is that it enables customers like G42, and their customers, to, sort-of, quickly gain benefit from our machines," said Feldman.

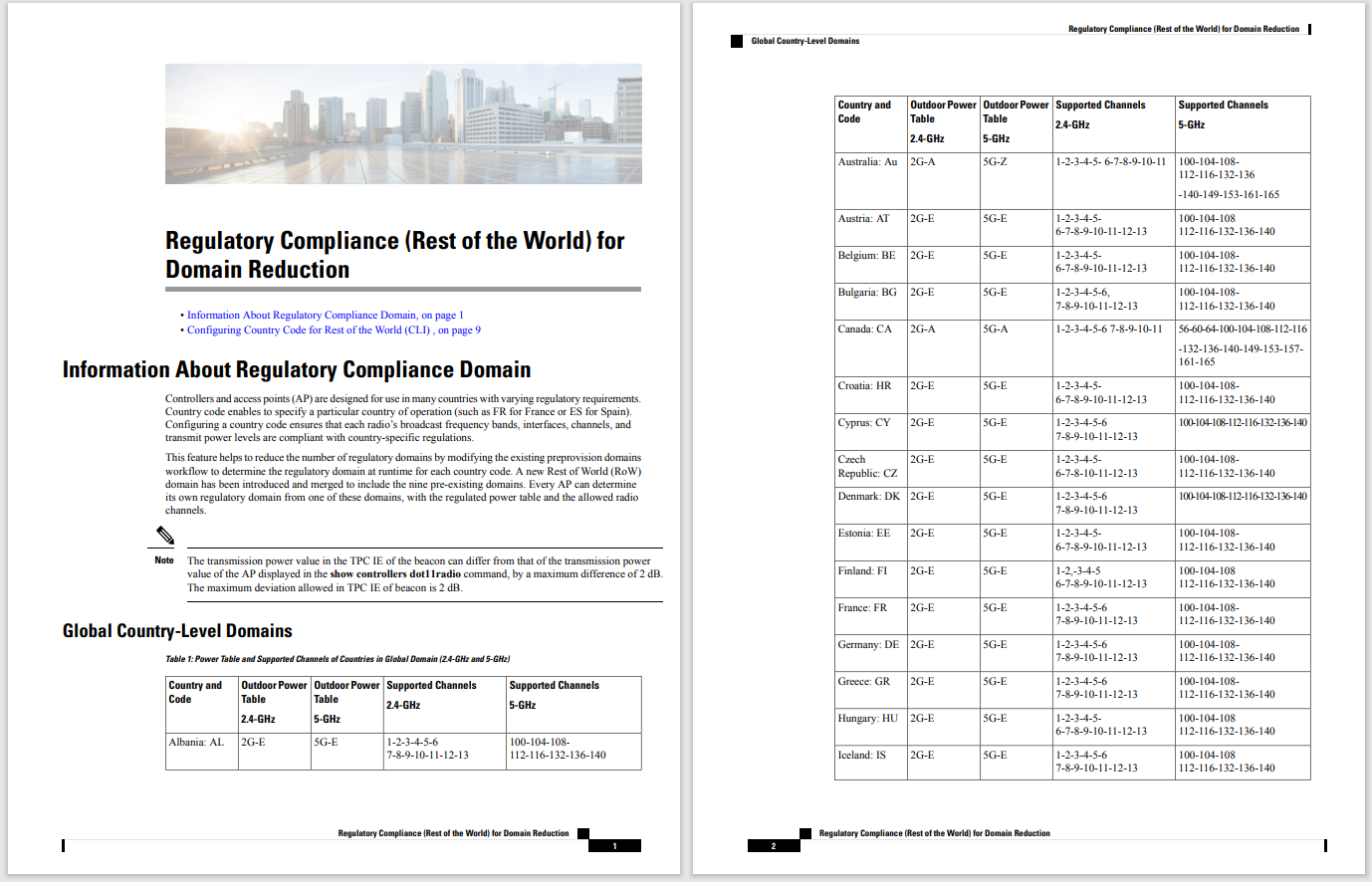

In a slide presentation, Feldman showed how a 1-billion-parameter neural network such as OpenAI's GPT can be put on a single Nvidia GPU chip with 1,200 lines of code. But scaling the neural network to a 40-billion-parameter model, which runs across 28,415 Nvidia GPUs, balloons the amount of code that must be deployed to almost 30,000 lines, said Feldman.

For a CS-2 system, however, a 100-billion-parameter model can be run with the same 1,200 lines of code.

Cerebras claims it can scale to larger and larger neural network models with the same amount of code versus the explosion in code required to string together Nvidia's GPUs.

Cerebras Systems

"If you wanna put a 40-billion or a hundred-billion parameter, or a 500-billion parameter, model, you use the exact same 1,200 lines of code," explained Feldman. "That is really a core differentiator, is that you don't have to do this," he said, referring to writing more code.

For Feldman, the scale of the latest creation represents not just bigness per se, but an attempt to have qualitatively different results by scaling up from the largest chip to the largest clustered systems.

"You know, when we started the company, you think that you can help change the world by building cool computers," Feldman reflected. "And over the course of the last seven years, we built bigger and bigger and bigger computers, and some of the biggest.

"Now we're on a path to build, sort of, unimaginably big, and that's awesome, to walk through the data center and to see rack after rack of your gear humming."
