For a while now, those who track robotics development have taken note of a quiet revolution in the sector. While self-driving cars have grabbed all the headlines, the work happening at the intersection of AI, machine vision, and machine learning is fast becoming the foundation for the next phase of robotics.

By combining machine vision with learning capabilities, roboticists are opening up a wide range of new possibilities, such as vision-based drones, robotic harvesting, robotic sorting in recycling, and warehouse pick and place. We're finally at the inflection point: the moment when these applications are becoming good enough to provide real value in semi-structured environments where traditional robots could never succeed.

To discuss this exciting moment and how it's going to fundamentally change the world we live in, I connected with Pieter Abbeel, a professor of electrical engineering and computer science at the University of California, Berkeley, where he is also the director of the Berkeley Robot Learning Lab and co-director of the Berkeley AI Research lab. He is co-founder and Chief Scientist of Covariant and host of the excellent podcast The Robot Brains.

In other words, he's got robotics bona fides, and what he says about the near future of automation is nothing short of astounding.

GN: You call AI Robotics a quiet revolution. Why is it revolutionary, and why do you think recent developments are still under the radar, at least in popular coverage?

For the past sixty years, we've had physically highly capable robots. However, they just weren't that smart. So these physically highly capable robots ended up constrained to factories -- mostly car and electronics factories -- where they were trusted to execute carefully pre-programmed motions. These robots are very reliable at doing the same thing over and over. They create value, but it's barely scratching the surface of what robots could do with better intelligence.

The quiet revolution is occurring in the area of artificial intelligence (AI) Robotics. AI robots are empowered with sophisticated AI models and vision. They can see, learn, and react to make the right decision based on the current situation.

Popular coverage of robotics trends towards home-butler style robots and self-driving cars because they're very relatable to our everyday lives. Meanwhile, AI Robotics is taking off in areas of our world that are less visible but critical to our livelihoods -- think e-commerce fulfillment centers and warehouses, farms, hospitals, recycling centers. All areas with a big impact on our lives, but not activities that the average person is seeing or directly interacting with daily.

GN: Semi-structured environments are sort of the next frontier for robots, which have traditionally been confined to structured settings like factories. Where are we going to see new and valuable robotics deployments in the next year or so?

The three big ones I anticipate are warehouse pick and pack operations, recycling sortation, and crop harvesting/care. From a technological point of view, these are naturally in the striking range of recent AI developments. And also, I know people working on AI Robotics in each of those industries, and they are making great strides.

GN: Why is machine vision one of the most exciting areas of development in robotics? What can robots now do that they couldn't do, say, five years ago?

Traditional robotic automation relied on very clever engineering to allow pre-programmed-motion robots to be helpful. Sure, that worked in car and electronics factories, but ultimately it's very limiting.

Giving robots the gift of sight completely changes what's possible. Computer Vision, the area of AI concerned with making computers and robots see, has undergone a night-and-day transformation over the past 5-10 years, thanks to Deep Learning. Deep Learning trains large neural networks on examples to do pattern recognition; in this case, pattern recognition enables an understanding of what's where in images. And Deep Learning, of course, provides capabilities beyond seeing. It also allows robots to learn what actions to take to complete a task, for example, picking and packing an item to fulfill an online order.

GN: A lot of coverage over the past decade has focused on the impact of sensors on autonomous systems (lidar, etc.). How is AI reframing the conversation in robotics development?

Before Deep Learning broke onto the scene, it was impossible to make a robot "see" (i.e., understand what's in an image). Consequently, in the pre-Deep Learning days, a lot of energy and cleverness went into researching alternative sensor mechanisms. Lidar is indeed one of the popular ones (it works by sending out a laser beam, measuring how long it takes for the reflection to come back, then multiplying that time by the speed of light and dividing by two, since the beam travels out and back, to determine the distance to the nearest obstacle in that direction). Lidar is wonderful when it works, but its failure modes can't be discounted (e.g., Does the beam always make it back to you? Does it get absorbed by a black surface? Does it go right through a transparent surface? etc.).
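To make the time-of-flight arithmetic concrete, here is a minimal sketch. Because the measured time covers the trip out to the obstacle and back, the one-way distance is half the round-trip time multiplied by the speed of light, d = c·t/2. The function names are hypothetical, and the sketch ignores the calibration and noise handling a real sensor would need. A missing echo, as when the beam is absorbed by a black surface or passes through glass, is modeled here as `None`.

```python
from typing import Optional

# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_distance_m(round_trip_time_s: float) -> float:
    """Hypothetical helper: distance to the reflecting surface, in metres.

    The pulse travels out and back, so the one-way distance is half of
    speed_of_light * round_trip_time.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def lidar_distance_or_none(round_trip_time_s: Optional[float]) -> Optional[float]:
    """Hypothetical helper: returns None when no echo came back at all,
    one of the lidar failure modes mentioned above."""
    if round_trip_time_s is None:
        return None
    return lidar_distance_m(round_trip_time_s)


# An echo arriving after about 66.7 nanoseconds means an obstacle roughly 10 m away.
print(round(lidar_distance_m(66.7e-9), 2))  # prints 10.0
print(lidar_distance_or_none(None))         # prints None
```

Forgetting the division by two would double every reported distance, which is why the halving is written out explicitly in the helper.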

But in a camera image, we humans can see what's there, so we know the information has been captured by the camera; we just need a way for the computer or robot to be able to extract that same information from the image. AI advances, specifically Deep Learning, have completely changed what's possible in that regard. We're on a path to build AI that can interpret images as reliably as humans can, as long as the neural networks have been shown enough examples. So there is a big shift in robotics from focusing on inventing dedicated sensory devices to focusing on building the AI that can learn and empower our robots using the natural sensory inputs already available to us, especially cameras.

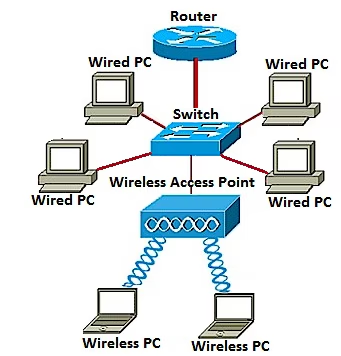

GN: Robotics has always been a technology of confluences. In addition to AI and machine vision, what technologies have converged to make these deployments possible?

Indeed, any robotic deployment requires a confluence of many great components and a team that knows how to make them all work together. Besides AI, there is, of course, the long-established technology of reliable industrial-grade manipulator robots. And, crucially, there are cameras and computers, which keep getting better and cheaper.

GN: What's going to surprise people about robots over the next five years?

The extent to which robots are contributing to our everyday lives, most often without our ever seeing them. Indeed, we likely won't personally see the robots physically interacting with the things we use every day, but soon there will be a day when the majority of the items in our household were touched by a robot at least once before reaching us.
