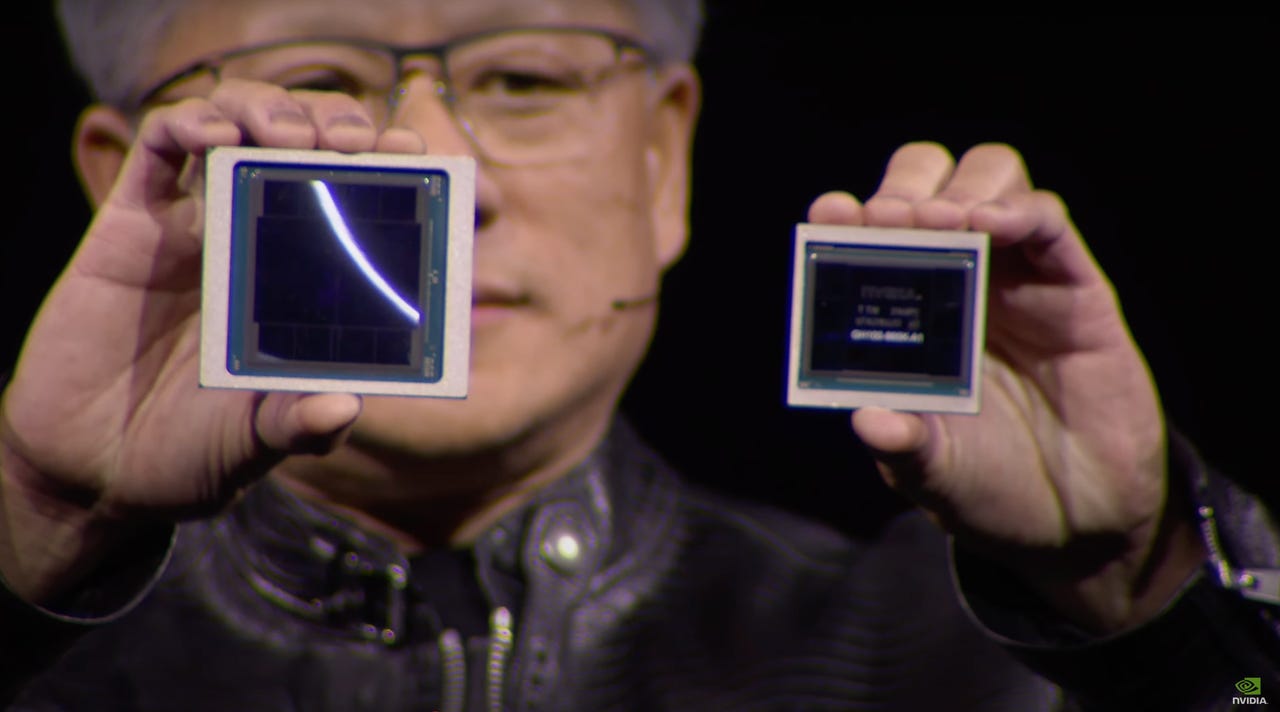

Nvidia co-founder and CEO Jensen Huang held up the new Blackwell GPU chip, left, to compare to its predecessor, H100, "Hopper."

Nvidia CEO Jensen Huang on Monday presided over the AI chipmaker's first technology conference held in person since the COVID-19 pandemic, the GPU Technology Conference, or GTC, in San Jose, California, and unveiled the company's new design for its chips, code-named "Blackwell."

Many consider GTC to be the "Woodstock of AI" or the "Lollapalooza of AI." "I hope you realize this is not a concert," Huang said following big applause at the outset. He called out the vast collection of partners and customers in attendance.

"Michael Dell is sitting right there," Huang said, noting the Dell founder and CEO was in the audience.

Huang emphasized the scale of computing required to train the large language models behind generative AI, or GenAI. A model with trillions of parameters, trained on trillions of "tokens," or word-parts, would require "30 billion quadrillion floating point operations," or 30 billion petaFLOPs of total work, Huang noted. "If you had a petaFLOP GPU, you would need 30 billion seconds to go compute, to go train that model -- 30 billion seconds is approximately 1,000 years."

"I'd like to do it sooner, but it's worth it -- that's usually my answer," Huang quipped.

Huang opened his presentation with an overview of the increasing size of AI workloads, noting that a petaFLOP-class GPU would need 30 billion seconds, or roughly 1,000 years, to train a trillion-parameter model.
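Huang's arithmetic can be checked in a few lines. The sketch below assumes his round numbers: 30 billion quadrillion floating-point operations in total, and a GPU that sustains exactly one petaFLOP per second. The variable names and the 365.25-day year are illustrative assumptions, not figures from the keynote.

```python
# Back-of-the-envelope check of the training-time figure quoted in the keynote.
TOTAL_OPS = 30e9 * 1e15                  # 30 billion quadrillion operations
GPU_OPS_PER_SECOND = 1e15                # assumed: one petaFLOP per second, sustained
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # assumed year length


def training_seconds(total_ops, ops_per_second):
    """Time needed to finish total_ops at a constant rate."""
    return total_ops / ops_per_second


seconds = training_seconds(TOTAL_OPS, GPU_OPS_PER_SECOND)
years = seconds / SECONDS_PER_YEAR
print(f"{seconds:.2e} seconds, about {years:,.0f} years")
```

Under these assumptions the answer comes out a little over 950 years, which is consistent with Huang's "approximately 1,000."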

Nvidia's H100 GPU, the current state-of-the-art chip, delivers on the order of 2,000 trillion floating-point operations per second, or 2,000 TFLOPS. A thousand TFLOPS equals one petaFLOP, so the H100 and its sibling, the H200, can manage only a couple of petaFLOPS, far below what the 30 billion petaFLOPs of work Huang referred to would demand.
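To put that gap in concrete terms, here is a minimal sketch that converts the H100 figure into petaFLOPS and estimates how long a single chip would take on the workload Huang described. It assumes the chip sustains its quoted peak rate for the whole run, which real training jobs do not achieve; the helper name is hypothetical.

```python
# Unit conversion and single-GPU training time, using the article's round numbers.
TFLOPS_PER_PETAFLOPS = 1_000
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # assumed year length


def tflops_to_petaflops(tflops):
    """Convert a throughput in TFLOPS to petaFLOPS."""
    return tflops / TFLOPS_PER_PETAFLOPS


h100_petaflops = tflops_to_petaflops(2_000)       # "on the order of" 2,000 TFLOPS
workload_petaflop = 30e9                          # total work, in units of 1e15 operations

h100_seconds = workload_petaflop / h100_petaflops # assumes peak rate, sustained
h100_years = h100_seconds / SECONDS_PER_YEAR

print(f"H100: {h100_petaflops:.1f} petaFLOPS")
print(f"Single-GPU training time: {h100_years:,.0f} years")
```

Even at twice the rate of Huang's hypothetical one-petaFLOP GPU, a single H100 would still need roughly 475 years under these idealized assumptions.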

"What we need are bigger GPUs -- we need much, much bigger GPUs," he said.

Blackwell, known in the industry as "HopperNext," can perform 20 petaFLOPS per GPU. It is meant to be delivered in an 8-way system, an "HGX" circuit board that holds eight of the chips.

Using "quantization," a kind of compressed math in which each value in a neural network is represented with fewer bits, in this case a 4-bit floating-point format called "FP4," the chip can run as many as 144 petaFLOPs in an HGX system.
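One way to see what narrower number formats buy is to count bits. The sketch below estimates how much memory the weights of a trillion-parameter model would occupy at different widths. FP16 and FP8 are included only as comparison points and are not discussed in the article; the calculation ignores scaling factors, activations, and optimizer state, so it is a rough illustration rather than a sizing guide.

```python
# Rough weight-storage estimate for a trillion-parameter model at several bit widths.
PARAMS = 1e12                                  # one trillion parameters
FORMAT_BITS = {"FP16": 16, "FP8": 8, "FP4": 4} # FP16/FP8 are comparison points only


def weight_bytes(params, bits_per_value):
    """Bytes needed to store params values at bits_per_value bits each."""
    return params * bits_per_value / 8


for name, bits in FORMAT_BITS.items():
    terabytes = weight_bytes(PARAMS, bits) / 1e12
    patterns = 2 ** bits                       # distinct values a format can encode, at most
    print(f"{name}: {patterns:,} bit patterns, {terabytes:.1f} TB of weights")
```

A 4-bit format can encode at most 16 distinct values, which is why quantization trades precision for a fourfold reduction in storage and data movement relative to 16-bit values.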

The chip has 208 billion transistors, Huang said, and is built with a custom manufacturing process at Taiwan Semiconductor Manufacturing known as "4NP." That is more than double the 80 billion transistors in Hopper GPUs.

The Nvidia Blackwell GPU multiplies ten-fold the number of floating-point math operations per second and more than doubles the number of transistors from the predecessor "Hopper" series. Nvidia notes the ability of the chip to run large language models 25 times faster.

Blackwell can run large language models of generative AI with a trillion parameters 25 times faster than prior chips, Huang said.

The chip is named after David Harold Blackwell, who, Nvidia relates, was "a mathematician who specialized in game theory and statistics, and the first Black scholar inducted into the National Academy of Sciences."

The Blackwell chip makes use of a new version of Nvidia's high-speed networking link, NVLink, which delivers 1.8 terabytes per second to each GPU. A discrete part of the chip is what Nvidia calls a "RAS engine," to maintain "reliability, availability and serviceability" of the chip. A collection of decompression circuitry improves performance of things such as database queries.

Amazon Web Services, Dell, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI are among Blackwell's early adopters.

Like its predecessor Hopper GPUs, two Blackwell GPUs can be combined with one of Nvidia's "Grace" microprocessors to produce a combined chip, called the "GB200 Grace Blackwell Superchip."

Thirty-six of the Grace chips and 72 of the GPUs can be combined into a rack-based computer Nvidia calls the "GB200 NVL72," which can perform 1,440 petaFLOPS, getting closer to the 30 billion petaFLOPs Huang cited.
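The rack-level figure follows from the per-chip numbers quoted earlier, as the sketch below shows. It assumes ideal scaling across all 72 GPUs and peak throughput sustained for the entire run, neither of which holds in practice, so the resulting training time is a lower bound for illustration only.

```python
# How the GB200 NVL72 numbers fit together, using the article's figures.
GRACE_CHIPS = 36
GPUS_PER_SUPERCHIP = 2            # two Blackwell GPUs per Grace processor
PETAFLOPS_PER_GPU = 20            # per-GPU figure quoted for Blackwell
WORKLOAD_PETAFLOP = 30e9          # total work, in units of 1e15 operations
SECONDS_PER_DAY = 24 * 3600

gpus = GRACE_CHIPS * GPUS_PER_SUPERCHIP
rack_petaflops = gpus * PETAFLOPS_PER_GPU

# Assumes perfect scaling and peak rate for the whole run (an idealization).
rack_seconds = WORKLOAD_PETAFLOP / rack_petaflops
rack_days = rack_seconds / SECONDS_PER_DAY

print(f"{gpus} GPUs, {rack_petaflops:,} petaFLOPS per rack")
print(f"Idealized training time: {rack_days:,.0f} days")
```

Under these assumptions, the thousand-year job shrinks to about 241 days on a single rack.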

A new system for the chips, the DGX SuperPOD, combines "tens of thousands" of the Grace Blackwell Superchips, boosting the operations per second even more.

Alongside Blackwell, Nvidia made several additional announcements:

Earth-2, a climate "digital twin" that produces high-resolution simulations of extreme weather conditions and is intended to "deliver warnings and updated forecasts in seconds compared to the minutes or hours in traditional CPU-driven modeling." The technology is based on a generative AI model built by Nvidia called "CorrDiff," which can generate "12.5x higher resolution images" of weather patterns "than current numerical models 1,000x faster and 3,000x more energy efficiently." The Weather Company is an initial user of the technology.

In addition to its own product and technology announcements, Nvidia announced several initiatives with partners.

More news can be found in the Nvidia newsroom.

You can catch the entire keynote address on replay on YouTube.
